Research from Deloitte indicates that only 23 percent of organizations feel fully prepared to manage AI-related risks, leaving significant gaps in oversight and security.

What is AI Governance?

AI governance is the system of policies, frameworks and oversight that directs how artificial intelligence is developed, used and regulated. It ensures AI operates with transparency, accountability and security across organizations, industries and governments.

Safeguarding AI systems so that they can operate securely, ethically, and in compliance with regulations has become a top priority. Without proper governance, AI systems can introduce bias, violate regulatory requirements, or become security risks.

For example, an AI recruitment tool trained on historical hiring data may inadvertently prioritize certain demographics over others, reinforcing discriminatory patterns. In sectors like healthcare or finance, deploying AI without complying with regional data protection laws can result in regulatory violations and substantial fines.

Additionally, AI models integrated into public-facing services without adequate threat prevention controls can be exploited by attackers, exposing organizations to cyberattacks and operational disruption.

Key Principles of AI Governance

A well-defined AI governance strategy incorporates essential principles that help organizations maintain control over AI-driven decision-making. According to a survey by the Ponemon Institute, 54 percent of respondents have adopted AI, while 47 percent of security teams reported concerns about vulnerabilities introduced by AI-generated code.

The following foundational principles are central to effective AI governance and help mitigate both operational and security risks:

Security protocols protect AI models from adversarial attacks, unauthorized modifications, and emerging cyberthreats

Accountability ensures that AI systems have designated oversight, preventing unregulated decision-making and reinforcing human control

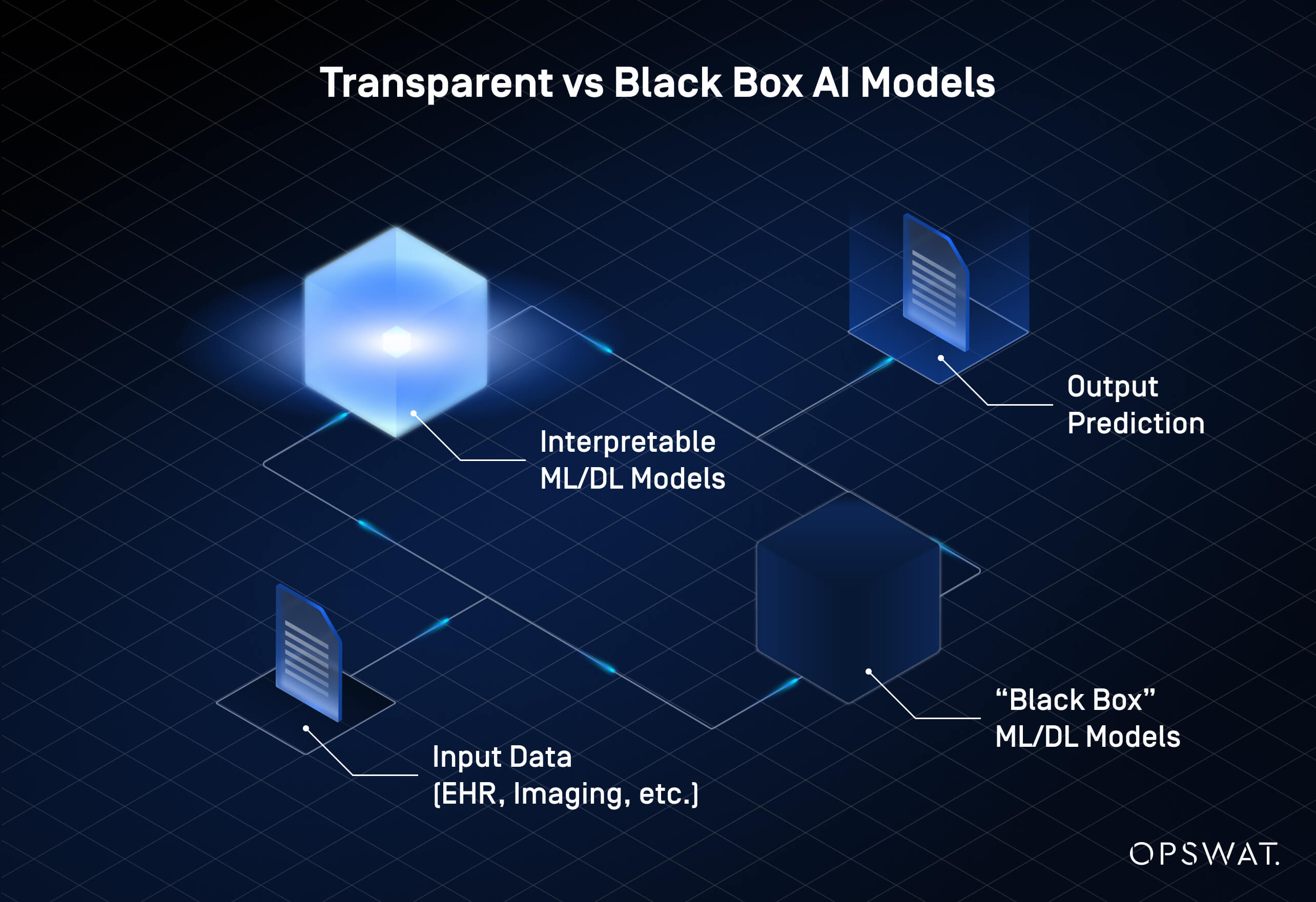

Transparency requires AI models to provide clear insights into their decision-making processes, reducing the risks of black-box models that lack interpretability

By embedding these principles into governance frameworks, organizations can mitigate risks while maintaining the efficiency and scalability of AI technologies.

The Growing Need for AI Risk Management

The rapid adoption of AI has introduced new challenges in risk management and compliance. Without adaptive strategies, organizations risk falling behind in addressing emerging threats and regulatory pressures.

AI risk management focuses on:

- Aligning governance strategies with evolving regulations and industry-specific AI oversight mandates so that organizations meet legal requirements

- Conducting ongoing bias detection and fairness audits to prevent discriminatory patterns in AI decision-making

- Countering AI-enhanced phishing attacks, deepfake fraud, and model manipulation, which are growing concerns that require proactive security measures
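To make fairness audits more concrete, here is a minimal Python sketch that compares selection rates across groups, as in the recruitment example above. It applies the four-fifths (80 percent) rule from US employment-selection guidance as a screening heuristic; the function names and the `(group, selected)` input format are illustrative assumptions, and a flagged group signals a need for closer review rather than proof of discrimination.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the share of candidates selected in each group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(decisions):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(decisions, threshold=0.8):
    """List groups whose impact ratio falls below the four-fifths threshold."""
    ratios = impact_ratios(decisions)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening results: group A 50 of 100 selected, group B 30 of 100.
decisions = ([("A", True)] * 50 + [("A", False)] * 50
             + [("B", True)] * 30 + [("B", False)] * 70)
print(impact_ratios(decisions))        # {'A': 1.0, 'B': 0.6}
print(flag_adverse_impact(decisions))  # ['B']
```

Comparing against the best-performing group, rather than an overall average, keeps the ratio easy to explain to auditors and matches how the four-fifths heuristic is usually stated.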

To stay ahead of these risks, organizations must embed AI governance into broader risk management frameworks, ensuring that compliance, security and ethical considerations remain integral to AI development and deployment.

AI Governance Frameworks

A structured AI governance framework helps organizations navigate compliance requirements, manage risks and integrate security measures into AI systems. Incorporating a clear security governance model into AI risk management frameworks reduces fragmentation of AI initiatives and strengthens compliance coverage.

Framework Development

Developing an AI governance framework requires a strategic approach that aligns with organizational policies and regulatory standards. The following elements represent core components of an effective framework development process:

Risk Assessment

Identify vulnerabilities in AI models, including bias, privacy concerns, and security threats.
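A risk assessment often starts with a simple register that scores each identified risk by likelihood and impact. The sketch below assumes a 1 to 5 scale for both and hypothetical cut-offs (15 and above is high, 8 and above is medium); organizations would calibrate these bands to their own risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    category: str    # e.g. "bias", "privacy", "security"
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject scores outside the assumed 1-5 scale.
        for attr in ("likelihood", "impact"):
            value = getattr(self, attr)
            if not 1 <= value <= 5:
                raise ValueError(f"{attr} must be 1-5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Map a 1-25 score to a band using hypothetical cut-offs."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks):
    """Return (name, score, rating) tuples, highest score first."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, rating(r.score)) for r in ranked]


register = [
    Risk("Model file tampering", "security", 2, 3),
    Risk("Skewed training data", "bias", 4, 4),
    Risk("Personal data in prompts", "privacy", 3, 5),
]
for row in prioritize(register):
    print(row)
```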

Regulatory Integration

Ensure AI governance aligns with industry-specific requirements and global standards.

Cross-Functional Collaboration

Bring together stakeholders from across the organization to create a governance framework that addresses both ethical concerns and operational needs.

Integration with Existing Organizational Policies

AI governance should not function in isolation but instead align with broader corporate policies on cybersecurity, ethics and risk management. Incorporating AI policies into enterprise risk management ensures AI models comply with security protocols and ethical guidelines.

AI auditing mechanisms can help detect governance failures early, while compliance tracking allows organizations to stay ahead of evolving regulations. External partnerships with AI vendors and security providers can also enhance governance strategies, reducing risks associated with third-party AI solutions.

Implementation Strategies

Successful AI governance requires a structured implementation approach, leveraging both technology and policy-driven strategies. Many organizations are turning to AI-powered compliance tools to automate governance processes and detect regulatory violations in real time. Key implementation measures include:

- Technology-driven compliance solutions use AI to track policy adherence, monitor risk factors, and automate auditing procedures

- Change management strategies ensure that AI governance policies are adopted across the organization

- Incident response planning addresses AI-specific security risks, ensuring organizations have proactive measures in place to manage governance failures, cyberattacks, and ethical violations

By embedding governance frameworks into existing policies and adopting structured implementation strategies, organizations can ensure AI systems remain secure, ethical, and compliant.

Ethical Guidelines and Accountability

As AI systems influence high-stakes decisions, organizations must establish ethical guidelines and accountability structures to ensure responsible use. Without governance safeguards, AI can introduce bias, compromise security, or operate outside regulatory boundaries.

Establishing Ethical Guidelines

Ethical AI governance focuses on fairness, transparency and security. The following practices are essential for building an ethical foundation in AI development and deployment:

- Principles and standards for ethical AI help ensure AI operates within acceptable boundaries, avoiding unintended consequences

- Developing a code of ethics formalizes responsible AI use, setting clear guidelines on transparency, data privacy and accountability

Creating Accountability Structures

To ensure AI governance is enforceable, organizations need mechanisms that track compliance and enable corrective action. Common accountability measures include:

- AI audits assess model performance, compliance, and security vulnerabilities

- Incident response planning prepares organizations to address AI-related failures, security breaches and governance lapses
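Audits and incident investigations depend on records that cannot be quietly altered. One common technique is a hash-chained log, where each entry stores the SHA-256 hash of the previous entry so that editing any past record breaks verification. This is a minimal sketch; the `AuditLog` class and its fields are hypothetical, and a chain like this is tamper-evident rather than tamper-proof, since anyone able to rewrite the whole log can recompute every hash unless the latest hash is also stored somewhere independent.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which every entry carries the hash of the entry before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record):
        # Hash all fields except the stored hash, with keys sorted for stability.
        payload = {k: v for k, v in record.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, actor, action, details):
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self):
        """Return False if any entry was edited or the chain of hashes is broken."""
        expected_prev = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != expected_prev or record["hash"] != self._digest(record):
                return False
            expected_prev = record["hash"]
        return True


log = AuditLog()
log.append("model-review-board", "approve_model", {"model": "credit-scoring-v2"})
log.append("analyst-17", "override_decision", {"case_id": 4812})
print(log.verify())  # True
log.entries[0]["details"]["model"] = "credit-scoring-v3"  # simulate tampering
print(log.verify())  # False
```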

By embedding ethical guidelines and accountability measures into governance frameworks, organizations can manage AI risks while maintaining trust and compliance.

Regulatory Frameworks

Compliance with regulatory frameworks is critical, yet many organizations struggle to keep pace with evolving policies. According to Deloitte, regulatory uncertainty is a top barrier to AI adoption, with many businesses implementing governance structures to address compliance risks.

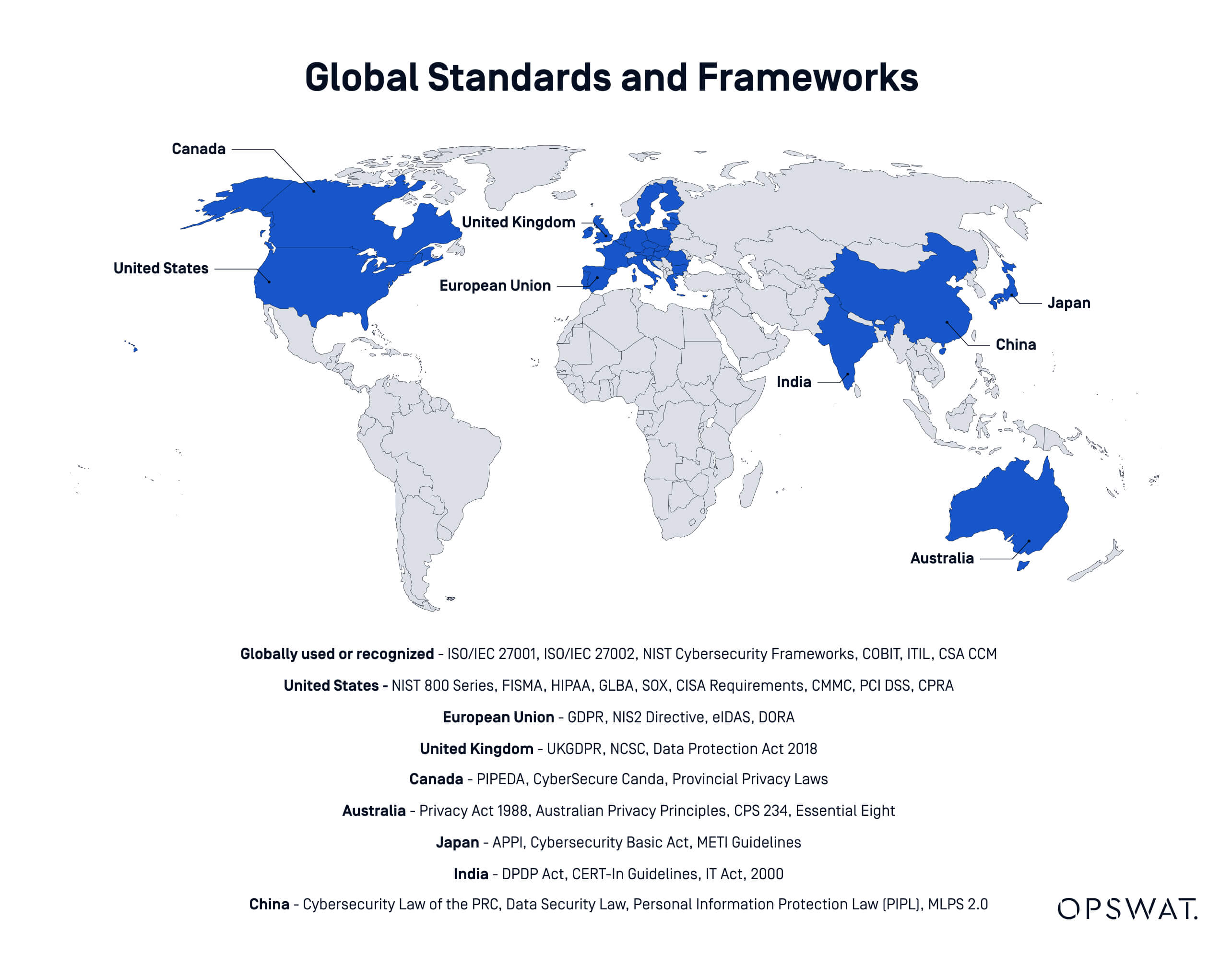

Overview of Global Regulations

AI governance is shaped by region-specific laws, each with distinct compliance requirements. The examples below illustrate how regulations vary across key jurisdictions:

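- The EU AI Act takes a risk-based approach, requiring transparency, risk assessments, and human oversight for high-risk AI applications. Under Article 6, AI systems are classified as high-risk if they are safety components of products (or are themselves products) covered by the EU harmonisation legislation listed in Annex I and require third-party conformity assessment, or if they fall into the use cases listed in Annex III, which include critical infrastructure, education, employment, and access to essential services. Organizations operating in the EU must align their AI policies with these requirements.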

- In the United States, the Federal Reserve's SR 11-7 guidance on model risk management sets expectations for how banking organizations develop, validate, and govern models, including AI models. Similar sector-specific guidelines are emerging across industries.

- Other international policies, including frameworks and regulations in Canada, Singapore, and China, emphasize ethical AI use, consumer protection, and corporate responsibility. Businesses must track regulatory developments in regions where they deploy AI.
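Before mapping obligations, teams often triage their AI inventory by the EU AI Act's risk tiers. The sketch below is a deliberately simplified illustration, not a legal determination: the category labels are shorthand assumptions rather than the Act's wording, each set lists only a few examples, the Annex I route is collapsed into a single yes/no flag, and it ignores details such as the Article 6(3) exemptions for Annex III systems that do not pose a significant risk.

```python
# Simplified lookup tables. Labels are illustrative shorthand, not the Act's legal text,
# and each set lists only a few examples.
PROHIBITED_PRACTICES = {"social_scoring", "manipulative_techniques", "untargeted_facial_scraping"}
ANNEX_III_AREAS = {
    "biometrics", "critical_infrastructure", "education", "employment",
    "essential_services", "law_enforcement", "migration_border_control",
    "justice_democratic_processes",
}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}


def triage(use_case: str, annex_i_safety_component: bool = False) -> str:
    """Return a provisional EU AI Act tier for one inventory entry (hypothetical helper)."""
    if use_case in PROHIBITED_PRACTICES:
        return "prohibited"
    if annex_i_safety_component or use_case in ANNEX_III_AREAS:
        return "high-risk"  # flag for legal review of the Article 6 conditions
    if use_case in TRANSPARENCY_USES:
        return "transparency obligations"
    return "minimal risk"


inventory = {"resume-screener": "employment", "support-bot": "chatbot", "spam-filter": "email_filtering"}
for system, use_case in inventory.items():
    print(system, "->", triage(use_case))
```

The output is a starting point for legal review: it tells a compliance team which systems to examine first, not which obligations finally apply.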


Compliance Strategies

Ensuring compliance with AI regulations requires a proactive approach. The following strategies help organizations align governance frameworks with evolving legal requirements, especially when working with high-risk AI systems:

- Building a compliance team allows organizations to manage regulatory risks, oversee AI audits, and implement required governance measures

- Navigating regulatory challenges involves continuous monitoring of policy changes while adapting governance structures in a complex environment of often overlapping regulations

- Regulatory audits and reporting help organizations demonstrate compliance and reduce legal risk through proactive monitoring

By embedding compliance strategies into AI governance frameworks, organizations can mitigate regulatory risks while ensuring AI remains ethical and secure.

Transparency and Explainability

Organizations face increasing pressure to make AI-driven decisions explainable, particularly in high-risk applications such as finance, healthcare, and cybersecurity. Despite this, many AI models remain complex, limiting visibility into how they function.

Designing Transparent AI Systems

AI transparency involves making decision-making processes understandable to stakeholders, regulators, and end users. The following approaches support AI explainability and help mitigate the risks associated with so-called black-box AI models whose internal workings are not readily understandable:

- Effective communication strategies help organizations translate AI decision-making into clear, interpretable outputs. Providing documentation, model summaries and impact assessments can improve transparency.

- Tools and technologies for transparency ensure explainable AI by offering insights into how AI models process data and generate outcomes. AI auditing tools, interpretability frameworks and explainable AI (XAI) techniques help mitigate concerns around black-box decision-making.
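Permutation importance is one widely used, model-agnostic XAI technique: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below implements it in plain Python against a toy loan-approval rule; the model, features and data are hypothetical. In practice, libraries such as scikit-learn provide this as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == y for row, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)  # seeded so results are reproducible
    baseline = accuracy(model, rows, labels)
    n_features = len(rows[0])
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            permuted = [row[:j] + (value,) + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical applicants as (income, region_code); the rule ignores region_code.
def approve(row):
    return row[0] >= 50


applicants = [(20 + 5 * i, i % 3) for i in range(20)]
outcomes = [approve(a) for a in applicants]
print(permutation_importance(approve, applicants, outcomes))
```

A feature the model never uses, like `region_code` here, scores exactly zero, which is the kind of evidence a reviewer can use to confirm that a sensitive attribute is not driving decisions.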

By prioritizing transparency, organizations can improve regulatory alignment, reduce bias-related risks, and build trust in AI applications.

Monitoring and Continuous Improvement

AI governance is an ongoing process that requires continuous monitoring, risk assessment and refinement to ensure security and compliance. As AI-driven systems handle increasing volumes of sensitive data, organizations must establish secure workflows to prevent unauthorized access and regulatory violations.

Managed file transfer solutions play a critical role in AI policy enforcement, maintaining auditability and reducing compliance risks in AI-powered data exchanges.

Performance Metrics and Feedback Loops

Tracking AI system performance is essential for ensuring reliability, security and compliance. The following practices contribute to robust monitoring and adaptive governance:

- Secure data workflows prevent unauthorized access and ensure that AI-driven data exchanges follow strict security policies. OPSWAT’s MetaDefender Managed File Transfer (MFT)™ enables organizations to enforce encryption, access controls and automated compliance monitoring to reduce the risk of data exposure.

- Adaptive governance mechanisms allow AI models to refine decision-making based on continuous feedback while maintaining strict security and compliance measures. AI-driven security solutions, such as Managed File Transfer platforms, help classify sensitive data in real time, ensuring adherence to evolving regulatory requirements.
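A simple feedback loop compares the data a model sees in production against the data it was validated on. The sketch below computes the population stability index (PSI) over shared bins and maps it to a status; the 0.1 and 0.25 thresholds are a common rule of thumb rather than a standard, and the bin edges and score samples in the example are arbitrary assumptions.

```python
import bisect
import math


def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin; edges are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # bisect_right puts v in bin i where edges[i-1] <= v < edges[i].
        counts[bisect.bisect_right(edges, v)] += 1
    # A small floor keeps empty bins from causing log(0) or division by zero.
    return [max(c / len(values), eps) for c in counts]


def psi(baseline, recent, edges):
    """Population stability index: sum of (q - p) * ln(q / p) over bins."""
    p = bin_shares(baseline, edges)
    q = bin_shares(recent, edges)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_status(score, warn=0.1, alert=0.25):
    """Rule-of-thumb bands; tune them per model and feature."""
    if score >= alert:
        return "alert"
    if score >= warn:
        return "warn"
    return "stable"


# Hypothetical model scores at validation time vs. recent production traffic.
validation_scores = [0.1 * i for i in range(100)]
production_scores = [5 + 0.05 * i for i in range(100)]
edges = [2.5, 5.0, 7.5]
score = psi(validation_scores, production_scores, edges)
print(round(score, 3), drift_status(score))
```

An "alert" status would typically trigger human review or retraining rather than an automatic model change, keeping the adaptive loop under governance control.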

Building Risk Frameworks for Continuous Improvement

AI-powered workflows must be continuously evaluated for vulnerabilities, particularly as cyberthreats evolve. According to the World Economic Forum, 72 percent of surveyed organizations reported a rise in cyber risks over the past year, driven by increases in phishing, social engineering, identity theft and cyber-enabled fraud. The following strategies support long-term resilience:

- AI-driven security enforcement protects AI data exchanges by integrating advanced threat detection, data loss prevention and compliance controls. MetaDefender Managed File Transfer (MFT)™ ensures organizations can securely transfer AI-sensitive data without increasing regulatory exposure.

- Automated compliance enforcement streamlines regulatory adherence by applying predefined security policies to all AI-related file transfers. By leveraging AI-powered governance solutions, organizations can reduce the risk of data breaches while maintaining operational efficiency.
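Automated enforcement of predefined policies can be pictured as a check that runs before every transfer and returns any violations. This is a hypothetical sketch: the `TransferRequest` and `TransferPolicy` types, role names, destinations and blocked extensions are illustrative assumptions and do not represent the MetaDefender MFT configuration model or API.

```python
from dataclasses import dataclass, field
from pathlib import PurePath


@dataclass
class TransferRequest:
    user_role: str
    file_name: str
    encrypted: bool
    destination: str


@dataclass
class TransferPolicy:
    # Hypothetical defaults for an AI data pipeline.
    allowed_roles: set = field(default_factory=lambda: {"data-scientist", "ml-engineer"})
    approved_destinations: set = field(default_factory=lambda: {"training-cluster", "model-registry"})
    blocked_extensions: set = field(default_factory=lambda: {".exe", ".js", ".vbs"})
    require_encryption: bool = True


def evaluate(request: TransferRequest, policy: TransferPolicy) -> list:
    """Return policy violations; an empty list means the transfer may proceed."""
    violations = []
    if request.user_role not in policy.allowed_roles:
        violations.append(f"role '{request.user_role}' may not transfer AI data")
    if request.destination not in policy.approved_destinations:
        violations.append(f"destination '{request.destination}' is not approved")
    if PurePath(request.file_name).suffix.lower() in policy.blocked_extensions:
        violations.append(f"file type of '{request.file_name}' is blocked")
    if policy.require_encryption and not request.encrypted:
        violations.append("transfer must be encrypted")
    return violations


policy = TransferPolicy()
ok = TransferRequest("ml-engineer", "features.parquet", True, "training-cluster")
bad = TransferRequest("contractor", "loader.JS", False, "personal-drive")
print(evaluate(ok, policy))  # []
for violation in evaluate(bad, policy):
    print("DENY:", violation)
```

Collecting every violation, instead of stopping at the first, gives auditors a complete record of why a transfer was denied.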

By embedding secure data workflows into AI governance strategies, organizations can enhance security, maintain compliance, and ensure the integrity of AI-driven decision-making. Solutions like MetaDefender Managed File Transfer (MFT) provide the necessary safeguards to support secure and compliant AI operations.

MetaDefender Managed File Transfer (MFT) – AI Security and Compliance Solution

MetaDefender Managed File Transfer (MFT) plays a crucial role in AI security governance by providing:

- Policy-enforced file transfers that automatically apply security controls, such as access restrictions, encryption requirements and compliance validation

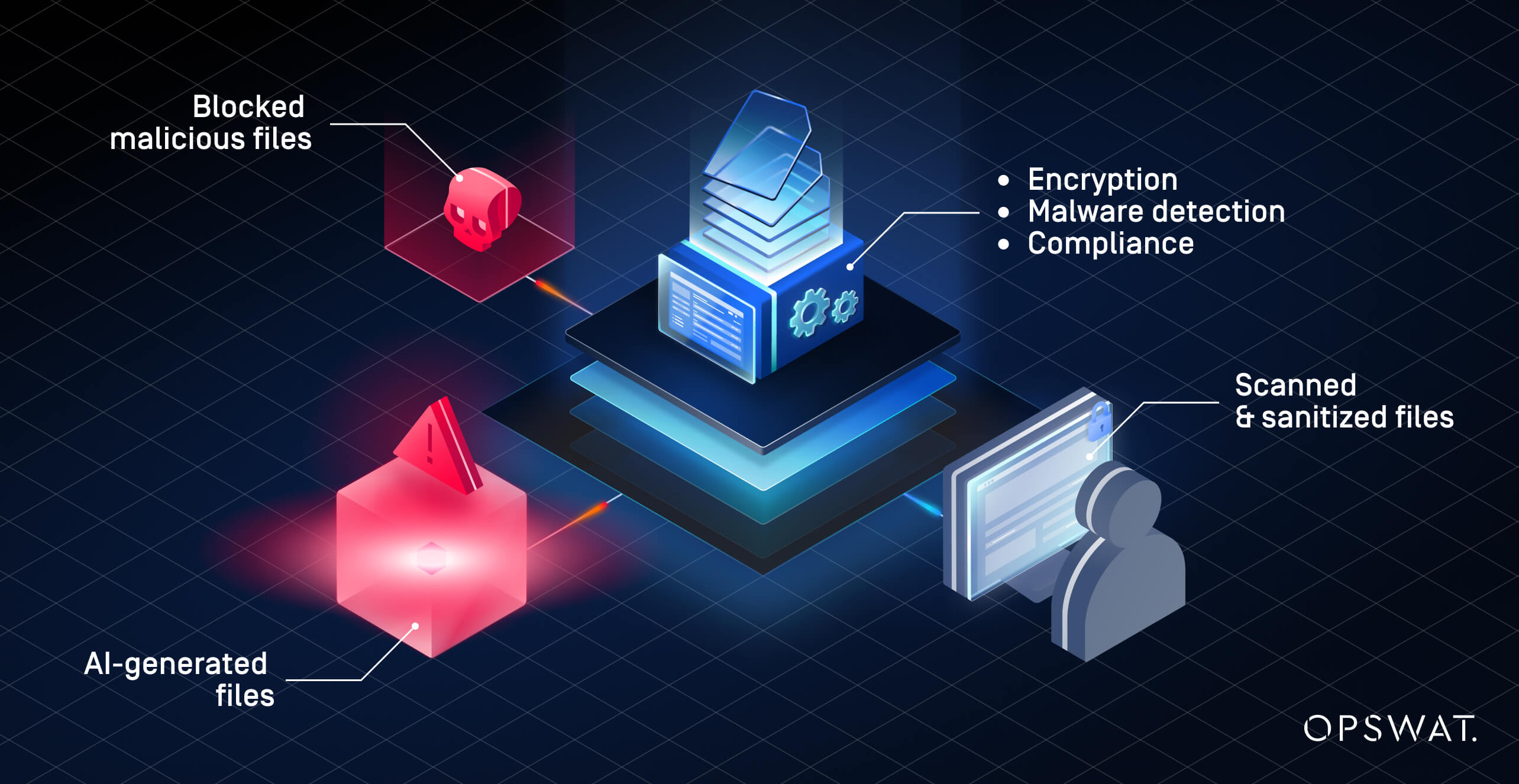

- Advanced threat prevention through layered detection that identifies and blocks malware, ransomware, embedded scripts and other file-based threats commonly used in AI-generated attacks

- Compliance-driven security measures that support regulatory mandates including GDPR, PCI DSS and NIS2 by integrating audit logging, role-based access controls and customizable policy enforcement

- Secure, managed data workflows that ensure AI-driven file transfers are encrypted (AES-256, TLS 1.3), integrity-verified and protected from manipulation or injection of malicious payloads throughout the exchange process

By integrating Multiscanning with heuristic and machine learning engines, Deep CDR™ Technology and AI-powered sandboxing technologies, MetaDefender Managed File Transfer (MFT) protects AI-generated data from evolving cyberthreats while ensuring adherence to AI governance regulations.

Securing AI-Driven Data Workflows

AI models rely on large volumes of data that often move between multiple systems, making secure data transfers essential. Without proper controls, AI-generated and AI-processed data can be vulnerable to tampering, unauthorized access or compliance violations.

MetaDefender Managed File Transfer (MFT) ensures that AI-powered data workflows remain protected through:

- End-to-end encryption using AES-256 and TLS 1.3, securing data both in transit and at rest

- Strict authentication and access controls with Active Directory integration, SSO (single sign-on) and MFA (multi-factor authentication) to prevent unauthorized data exchanges

- Data integrity verification through checksum validation, ensuring that AI-generated files are not tampered with during transfers
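Checksum validation generally works by having the sender publish a cryptographic hash of the file and the receiver recompute it after transfer. The article does not specify which algorithm MetaDefender MFT uses; the sketch below assumes SHA-256 and uses only Python's standard library. A checksum detects tampering only if the expected value reaches the receiver through a trusted channel.

```python
import hashlib
import hmac


def sha256_of_chunks(chunks):
    """Hash an iterable of byte chunks without loading everything into memory."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks."""
    with open(path, "rb") as f:
        return sha256_of_chunks(iter(lambda: f.read(chunk_size), b""))


def is_intact(actual_hex, expected_hex):
    """Compare digests in constant time, ignoring case and surrounding whitespace."""
    return hmac.compare_digest(actual_hex.lower(), expected_hex.strip().lower())


# The sender publishes this value alongside the file (for example in a signed manifest).
expected = hashlib.sha256(b"model weights v1").hexdigest()
received = sha256_of_chunks([b"model ", b"weights v1"])
print(is_intact(received, expected))  # True
```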

By enforcing these security measures, organizations can safely integrate AI-driven processes into their existing infrastructure without exposing sensitive data to risks.

AI-Powered Threat Prevention

AI-generated content introduces new security challenges, including adversarial AI attacks, embedded malware and file-based exploits. MetaDefender Managed File Transfer (MFT) strengthens security through multiple layers of protection, preventing AI-powered cyberattacks before they reach critical systems.

Metascan™ Multiscanning technology leverages 30+ anti-malware engines to detect known and zero-day threats, ensuring that AI-generated files are free from malicious payloads.

Deep CDR™ Technology removes hidden threats by stripping active content from files while preserving their usability, a critical step in preventing AI-generated exploits.

MetaDefender Sandbox™ detects evasive malware by executing suspicious files exchanged in AI workflows in an isolated environment and analyzing their behavior to uncover threats that traditional security measures can miss.

These capabilities make MetaDefender Managed File Transfer (MFT) a comprehensive security solution for organizations that rely on AI-driven data exchanges while needing to prevent malware infiltration and compliance violations.
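Multiscanning combines the verdicts of several independent engines into one decision. The article does not describe how Metascan weighs or combines results, so the policy below is an assumption for illustration: any malicious verdict blocks the file, too few completed scans send it to quarantine, and a suspicious verdict routes it to sandbox analysis. Engine names are placeholders.

```python
from enum import Enum


class Verdict(Enum):
    CLEAN = "clean"
    SUSPICIOUS = "suspicious"
    MALICIOUS = "malicious"
    FAILED = "failed"  # engine timed out or returned an error


def combine(verdicts, min_successful=3):
    """Turn per-engine verdicts into a single action under an assumed policy."""
    completed = [v for v in verdicts.values() if v is not Verdict.FAILED]
    if Verdict.MALICIOUS in completed:
        return "block"       # a single detection is enough to stop the file
    if len(completed) < min_successful:
        return "quarantine"  # not enough engines finished to trust "clean"
    if Verdict.SUSPICIOUS in completed:
        return "sandbox"     # escalate to dynamic analysis
    return "allow"


scan = {
    "engine_a": Verdict.CLEAN,
    "engine_b": Verdict.SUSPICIOUS,
    "engine_c": Verdict.CLEAN,
    "engine_d": Verdict.FAILED,
}
print(combine(scan))  # sandbox
```

Treating a failed engine as "no result" rather than "clean" is the key design choice: it prevents a file from passing simply because scanners timed out.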

Compliance-Driven AI Governance

AI-generated data is subject to strict regulatory oversight, requiring organizations to implement security policies that ensure compliance with evolving legal frameworks. MetaDefender Managed File Transfer (MFT) helps businesses meet these requirements by integrating proactive compliance controls into every file transfer:

- Proactive DLP™ scans AI-generated files for sensitive content, preventing unauthorized data exposure and ensuring compliance with regulations such as GDPR, PCI DSS and NIS2

- Comprehensive audit logs and compliance reporting provide visibility into AI-related file transfers, allowing organizations to track access, modifications and policy enforcement

- RBAC (role-based access controls) enforce granular permissions, ensuring that only authorized users can access or transfer AI-related files in accordance with governance policies
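Content-aware DLP typically pairs pattern matching with validation to keep false positives down. As one illustration relevant to PCI DSS, the sketch below finds likely payment card numbers with a regular expression, confirms them with the Luhn checksum and masks all but the last four digits. This is a minimal sketch of a common technique, not a description of how Proactive DLP is implemented.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for position, ch in enumerate(reversed(digits)):
        d = int(ch)
        if position % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def _mask(match):
    digits = re.sub(r"[ -]", "", match.group())
    if not luhn_valid(digits):
        return match.group()  # leave numbers that fail the checksum untouched
    return "*" * (len(digits) - 4) + digits[-4:]


def redact_card_numbers(text: str) -> str:
    """Mask likely payment card numbers, keeping the last four digits."""
    return CARD_CANDIDATE.sub(_mask, text)


sample = "Customer card 4111 1111 1111 1111, ticket 4111-1111-1111-1112"
print(redact_card_numbers(sample))
# Customer card ************1111, ticket 4111-1111-1111-1112
```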

With these governance capabilities, MetaDefender Managed File Transfer (MFT) not only secures AI data but also helps organizations align with regulatory requirements, reducing legal and operational risks associated with AI-driven processes.

Strengthen AI Security with MetaDefender Managed File Transfer (MFT)

AI-driven data workflows require robust security and compliance controls. MetaDefender Managed File Transfer (MFT) provides advanced threat prevention, regulatory compliance, and secure data exchange solutions for AI-driven environments. Learn more about how MetaDefender MFT, OPSWAT's industry-leading managed file transfer solution, can enhance your AI governance strategy.