Managed file transfer is one of the basic services every modern application needs to offer. Whether files arrive through integrations with other services or as simple personal uploads, threat detection helps protect user and application data.

In this article, we will demonstrate how organizations can greatly reduce the threat of malware infection (or infiltration) using the MetaDefender Cloud public API in a serverless architecture.

What is serverless computing?

The word serverless doesn't mean "no servers"; it describes an event-driven application design in which resources are provisioned and fully managed by the platform. Applications are deployed as functions (functions as a service, or FaaS) that the managed platform triggers in response to events and scales according to demand.

You might be inclined to ask: how is this different from microservices?

The idea behind microservices was to break one monolithic application down into smaller components that are easier to manage and scale. FaaS applies the same idea at an even finer granularity: each function handles a single event-driven operation that needs little business logic, which makes it easy to manage.

What is Lambda?

Since Amazon announced AWS Lambda in November 2014, serverless computing has become one of the hottest topics in the public cloud market, because it lets users run small computing tasks that were previously packaged as microservices.

The benefits of using Lambda include:

- Automated deployments allowing you to create a continuous delivery pipeline

- Scalable and highly available computing capacity

- Managed and maintenance-free service

After uploading your source code, you can run your functions by calling the Lambda REST API or through event sources such as Kinesis, S3, DynamoDB, CloudTrail, and API Gateway. Supported runtimes include Node.js, Java, Python, and .NET Core.
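To make the FaaS model concrete, here is a minimal sketch of a Node.js Lambda function: Lambda calls the exported handler with the triggering event, and an async handler's resolved value becomes the invocation result. The `name` field is a hypothetical payload for a direct invocation, not the event format of any particular integration.

```javascript
// Minimal Node.js Lambda handler (sketch).
// Lambda passes the event payload and a context object; the value this
// async function resolves with is returned to the caller.
const handler = async (event, context) => {
  // `name` is a hypothetical field of a directly invoked payload
  const name = (event && event.name) || "world";
  return { message: `hello, ${name}` };
};

module.exports = { handler };
```

Invoked directly with `{"name": "lambda"}`, this function returns `{"message": "hello, lambda"}`; an S3 or SQS trigger would instead deliver that service's own event structure.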

What is SQS?

When building scalable and highly available systems, it's a best practice to decouple your services by using either:

- ELB (Elastic Load Balancing) for synchronous decoupling of web applications that handle requests, or

- a message queue (in this case SQS) for asynchronous decoupling

AWS offers SQS (Simple Queue Service) as a fault-tolerant, scalable, distributed message queue. A typical scenario would be:

- A producer sends messages to an SQS queue

- Then, a group of consumers reads messages from SQS and executes tasks
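The producer/consumer scenario relies on one SQS behavior worth seeing in code: receiving a message does not remove it. The message is only hidden for a visibility timeout, and the consumer must delete it explicitly once processing succeeds. The toy in-memory queue below is a hypothetical stand-in, not the SQS API; it uses a plain message id where real SQS hands out a receipt handle for deletion.

```javascript
// Toy in-memory stand-in for an SQS queue (illustration only, not the SQS API).
// Receiving hides a message for a visibility timeout; only delete() removes it.
class ToyQueue {
  constructor(visibilityTimeoutMs, now = () => Date.now()) {
    this.visibilityTimeoutMs = visibilityTimeoutMs;
    this.now = now; // injectable clock so the behavior can be tested
    this.messages = []; // entries: { id, body, invisibleUntil }
    this.nextId = 1;
  }

  // Producer side: enqueue a message body and return its id
  send(body) {
    const id = String(this.nextId++);
    this.messages.push({ id, body, invisibleUntil: 0 });
    return id;
  }

  // Consumer side: return up to `max` visible messages and hide them
  receive(max = 10) {
    const t = this.now();
    const batch = this.messages.filter((m) => m.invisibleUntil <= t).slice(0, max);
    for (const m of batch) m.invisibleUntil = t + this.visibilityTimeoutMs;
    return batch.map((m) => ({ id: m.id, body: m.body }));
  }

  // Consumer side: acknowledge successful processing
  delete(id) {
    this.messages = this.messages.filter((m) => m.id !== id);
  }
}
```

If a consumer crashes before calling `delete`, the message simply becomes visible again after the timeout and another consumer picks it up, which is what makes the queue fault-tolerant.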

Frameworks and Providers

Because of its ease of use, serverless computing has been implemented in a number of ways, ranging from all-in-one backend platforms for developing cloud applications, such as Firebase and Backendless, to pure hosting and code-execution services, most notably AWS Lambda, Google Cloud Functions, and Azure Functions.

For more details and tools, I suggest reading the awesome serverless list.

Implementation

Integrate S3, Lambda, and SQS

We will create a service whose functions are triggered by events. The data source is an S3 bucket: every file uploaded to it triggers a scan.

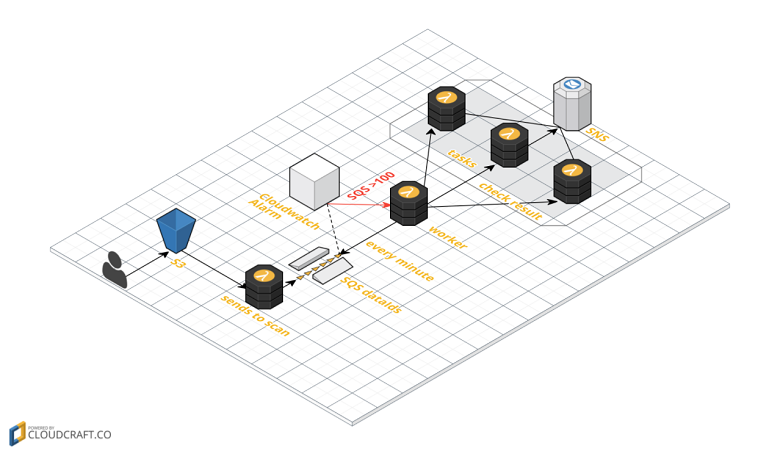


Steps:

- The S3 bucket emits an ObjectCreated event, which triggers the Scan Lambda function; the function submits the file for scanning and adds a message with the returned data_id to the queue

- SQS receives and stores the message

- The worker Lambda function runs every minute on a CloudWatch Events schedule, and is also triggered whenever the queue reaches the threshold of 100 messages

- The worker reads as many messages as possible from SQS and invokes the task Lambda function for each message

- The task function performs the actual check, publishes a message to SNS, and then deletes the message from SQS

- SNS sends out a notification to all subscribers
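The first step starts from the S3 event notification. As a sketch of how the scan function could pull the bucket and key out of it, the helper below follows the documented S3 event structure (`Records[].s3.bucket.name`, `Records[].s3.object.key`); the function name `objectsFromS3Event` is hypothetical. S3 URL-encodes object keys in notifications, with spaces sent as `+`, so the key is decoded before use.

```javascript
// Extract { bucket, key } pairs from an S3 event notification (sketch).
// Only ObjectCreated records from S3 are kept; keys are URL-decoded
// because S3 encodes them (spaces arrive as '+').
function objectsFromS3Event(event) {
  return (event.Records || [])
    .filter((r) => r.eventSource === "aws:s3" && r.eventName.startsWith("ObjectCreated:"))
    .map((r) => ({
      bucket: r.s3.bucket.name,
      key: decodeURIComponent(r.s3.object.key.replace(/\+/g, " ")),
    }));
}
```

Forgetting to decode is a common source of "NoSuchKey" errors for uploads whose names contain spaces or special characters.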

Let's dive a bit deeper into the implementation and set up this infrastructure with the Serverless Framework.

Before you start, look over the Serverless Framework quick start guide to make sure you have the basic setup in place.

Setup: MetaDefender Cloud API with Serverless Computing

S3 Bucket

The Serverless Framework creates a bucket with the predefined name when the service is deployed. The relevant part of serverless.yml contains:

- an IAM role statement granting permissions on the bucket

- the bucket's use as the event source of the Lambda function that requires it

SQS Queues

We need to grant the service's IAM role permissions on the new SQS queue and declare the queue as a resource:

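```yaml
resources:
  Resources:
    BacklogQueue:
      Type: AWS::SQS::Queue
      Properties:
        QueueName: ${self:custom.sqs}
        MessageRetentionPeriod: '1209600' # 14 days in seconds, the SQS maximum
        VisibilityTimeout: '60'           # seconds a received message stays hidden
        RedrivePolicy:
          deadLetterTargetArn:
            Fn::GetAtt:
              - DeadLetterQueue
              - Arn
          maxReceiveCount: '10'           # receives allowed before moving to the DLQ
```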

The CloudWatch alarm, which is attached to the queue, is set up to trigger our worker when the threshold is reached.

We also have a dead-letter queue to handle cases when messages can't be successfully processed:

```yaml
    DeadLetterQueue:
      Type: AWS::SQS::Queue
      Properties:
        QueueName: ${self:custom.sqs}-dead-letter-queue
        MessageRetentionPeriod: '1209600'
```
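With `maxReceiveCount` set to 10, SQS moves a message to the dead-letter queue once its receive count exceeds 10. A consumer can see how many attempts a message has used through the `ApproximateReceiveCount` system attribute, which SQS returns as a string in `message.Attributes` when it is requested on ReceiveMessage. The helpers below are a hypothetical sketch of using that attribute to flag a message on its final attempt, for example to log extra context before it lands in the dead-letter queue.

```javascript
// Remaining receives before SQS redrives a message to the DLQ (sketch).
// The count includes the current receive; SQS redrives once it would
// exceed maxReceiveCount. A missing attribute is treated as 1 receive.
function attemptsLeft(message, maxReceiveCount = 10) {
  const received = Number((message.Attributes || {}).ApproximateReceiveCount || 1);
  return Math.max(0, maxReceiveCount - received);
}

// True when a failure now would send the message to the dead-letter queue
function isLastAttempt(message, maxReceiveCount = 10) {
  return attemptsLeft(message, maxReceiveCount) === 0;
}
```

Together with the 60-second visibility timeout, this means an unprocessed message is retried roughly once a minute, at most 10 times, before it is parked in the dead-letter queue for inspection.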

Scan Handler

The scan handler's definition contains the S3 trigger event and its environment variables. The handler itself:

- reads the S3 object

- uploads it to the MetaDefender Cloud API

- forwards the returned data_id to the queue

MetaDefender Cloud APIs use user-specific API keys for authentication. To obtain a key, register for a free OPSWAT account; the key is shown on your account page.
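The three steps above can be sketched as a single function. To keep it testable without AWS, its dependencies are injected: `getObject` would wrap S3 GetObject, `sendMessage` would wrap SQS SendMessage, and `fetchFn` is any fetch-compatible function. These wrapper names are hypothetical. The endpoint (`POST https://api.metadefender.com/v4/file`), the `apikey` header, and the `data_id` response field assume MetaDefender Cloud's v4 file-scanning API; verify them against the current API reference before relying on them.

```javascript
// Scan handler sketch with injected dependencies (hypothetical wrappers):
//   getObject(bucket, key) -> Promise<Buffer>, sendMessage(body) -> Promise,
//   fetchFn(url, options) -> Promise<Response-like>
// Endpoint and header names assume MetaDefender Cloud's v4 file API.
const MDCLOUD_FILE_URL = "https://api.metadefender.com/v4/file";

async function scanObject({ bucket, key }, { getObject, sendMessage, fetchFn, apiKey }) {
  // 1. Read the uploaded object from S3
  const body = await getObject(bucket, key);

  // 2. Upload it to MetaDefender Cloud for scanning
  const res = await fetchFn(MDCLOUD_FILE_URL, {
    method: "POST",
    headers: {
      apikey: apiKey,
      "content-type": "application/octet-stream",
      filename: key.split("/").pop(), // report the original file name
    },
    body,
  });
  if (!res.ok) throw new Error(`MetaDefender upload failed: HTTP ${res.status}`);
  const { data_id } = await res.json();
  if (!data_id) throw new Error("MetaDefender response did not include a data_id");

  // 3. Queue the data_id so the worker and task can fetch the report later
  await sendMessage(JSON.stringify({ data_id, bucket, key }));
  return data_id;
}
```

Queuing the data_id rather than waiting for the result keeps the scan function short-lived: a large file may take a while to scan, and the task function picks up the report when it is ready.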

Worker

The worker definition specifies events to which it responds, as well as environment variables.

```yaml
worker:
  handler: worker.handler
  name: worker
  environment:
    sqs: ${self:custom.sqs}
    lambda: task
  events:
    - schedule: rate(1 minute)
    - sns: ${self:custom.sns}
```

The worker function needs to read as many messages as possible from the queue and handle each item by invoking the task function.
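A sketch of that drain loop follows, again with hypothetical injected wrappers: `receiveBatch` around SQS ReceiveMessage, `invokeTask` around an asynchronous Lambda Invoke (`InvocationType: "Event"`, which returns as soon as Lambda accepts the event), and `remainingMs` around `context.getRemainingTimeInMillis()`. The 5-second safety margin is an arbitrary choice.

```javascript
// Worker sketch: drain the queue in batches and hand each message to the task.
//   receiveBatch(max) -> Promise<Array<{ Body, ReceiptHandle }>>
//   invokeTask(payload) -> Promise  (asynchronous Lambda invocation)
//   remainingMs() -> number         (time left in this invocation)
const SQS_MAX_BATCH = 10; // ReceiveMessage returns at most 10 messages per call

async function drainQueue({ receiveBatch, invokeTask, remainingMs, safetyMarginMs = 5000 }) {
  let dispatched = 0;
  // Keep pulling while messages keep coming and the invocation has time left
  while (remainingMs() > safetyMarginMs) {
    const batch = await receiveBatch(SQS_MAX_BATCH);
    if (batch.length === 0) break; // queue looks empty (short polls can miss messages)
    // The task deletes each message after processing, so pass its receipt handle
    await Promise.all(
      batch.map((msg) => invokeTask({ body: msg.Body, receiptHandle: msg.ReceiptHandle }))
    );
    dispatched += batch.length;
  }
  return dispatched;
}
```

Because the worker only dispatches and never deletes, a task that fails leaves its message in the queue, where the visibility timeout and redrive policy take care of retries.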

Task

The task function consumes each event sent by the worker and queries the MetaDefender Cloud API for the scan report of the received data_id.

Once the scan is complete, it formats the report:

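```javascript
if (res && res.scan_results && res.scan_results.progress_percentage === 100) {
  // Scan finished: pretty-print the full report for the notification body
  response.data = JSON.stringify(res, null, 2);
  // createTopic is idempotent and resolves with { TopicArn }; pass the result
  // to sendEmail via .then() rather than also supplying a callback
  return sns.createTopic({ Name: config.snsEmail }).promise()
    .then((topic) => sendEmail(null, topic));
}
```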
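The report lookup that feeds this check can be sketched as follows. It assumes MetaDefender Cloud's v4 endpoint `GET https://api.metadefender.com/v4/file/{data_id}` with the same `apikey` header, and injects a fetch-compatible `fetchFn`; the function name is hypothetical. It returns the report once `progress_percentage` reaches 100, and `null` while the scan is still running.

```javascript
// Report lookup sketch, assuming MetaDefender Cloud's v4 GET /v4/file/{data_id}.
// Resolves with the report when scanning is complete, or null while in progress.
async function fetchScanReport(dataId, { fetchFn, apiKey }) {
  const url = `https://api.metadefender.com/v4/file/${encodeURIComponent(dataId)}`;
  const res = await fetchFn(url, { headers: { apikey: apiKey } });
  if (!res.ok) throw new Error(`MetaDefender lookup failed: HTTP ${res.status}`);
  const report = await res.json();
  // Same completion check as in the task snippet
  const done = Boolean(
    report && report.scan_results && report.scan_results.progress_percentage === 100
  );
  return done ? report : null;
}
```

When the lookup returns `null`, the task can simply leave the message in the queue: after the 60-second visibility timeout it is delivered again, so polling happens naturally without a sleep loop inside the function.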

Finally, it publishes an SNS notification; the topic is defined to send an email to a predefined subscriber:

```yaml
    MailQueue:
      Type: AWS::SNS::Topic
      Properties:
        DisplayName: "Serverless Mail"
        TopicName: ${self:custom.snsEmail}
        Subscription:
          - Endpoint: ${self:custom.mailTo}
            Protocol: "email"
```
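The last step of the task, publishing the report and then removing the message from the queue, could look like the sketch below. `publish` and `deleteMessage` are hypothetical wrappers around SNS Publish and SQS DeleteMessage, and the subject wording is illustrative. The subject is truncated because SNS requires email subjects to be shorter than 100 characters. Note also that SNS only delivers to an email endpoint after the recipient confirms the subscription.

```javascript
// Final task step sketch: publish the finished report, then delete the message.
//   publish(params) -> Promise       (SNS Publish with TopicArn/Subject/Message)
//   deleteMessage(handle) -> Promise (SQS DeleteMessage by receipt handle)
const MAX_SUBJECT = 99; // SNS subjects must be shorter than 100 characters

function buildNotification(topicArn, report) {
  return {
    TopicArn: topicArn,
    Subject: `MetaDefender scan finished: ${report.data_id}`.slice(0, MAX_SUBJECT),
    Message: JSON.stringify(report, null, 2), // same formatting as the task snippet
  };
}

async function completeTask({ topicArn, report, receiptHandle }, { publish, deleteMessage }) {
  await publish(buildNotification(topicArn, report));
  // Delete only after publishing succeeded; if publish throws, the message
  // reappears after the visibility timeout and the task is retried
  await deleteMessage(receiptHandle);
}
```

Ordering publish before delete means a subscriber may occasionally get a duplicate email after a retry, which is usually preferable to silently losing a scan result.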

Summary

Wiring AWS Lambda to other AWS services as event sources is no longer a tedious task. With the Serverless Framework, a few lines of configuration define infrastructure that previously required lengthy CloudFormation templates.

This makes it easy to build services that consume tasks from a queue, send out large volumes of email, scan files after upload, or analyze user behavior, and to integrate the MetaDefender Cloud API into a serverless architecture.

You can find the example code on Github: vladoros/mdcloud-serverless.