What is AI Hacking?

AI hacking is the use of artificial intelligence to enhance or automate cyberattacks. It allows threat actors to generate code, analyze systems, and evade defenses with minimal manual effort.

AI models, especially large language models (LLMs), make attack development faster, cheaper, and more accessible to less experienced hackers. The result is a new set of AI attacks which is faster, more scalable, and often harder to stop with traditional defenses.

What is an AI Hacker?

An AI hacker is a threat actor who uses artificial intelligence to automate, enhance, or scale cyberattacks. AI hackers use machine learning models, generative AI, and autonomous agents to bypass security controls, craft highly convincing phishing attacks, and exploit software vulnerabilities at scale.

These attackers can include both human operators using AI tools and semi-autonomous systems that execute tasks with minimal human input. Human attackers using AI are real-world individuals—criminals, script kiddies, hacktivists, or nation-state actors—who use AI models to boost their attack capabilities.

Autonomous hacking agents are AI-driven workflows that can chain tasks—such as reconnaissance, payload generation, and evasion—with minimal oversight. While still guided by human-defined goals, these agents can operate semi-independently once launched, executing multistep attacks more efficiently than manual tools.

How Is AI Used for Cybercrime?

AI threat actors now use artificial intelligence to launch faster, smarter, and more adaptive cyberattacks. From malware creation to phishing automation, AI is transforming cybercrime into a scalable operation. It can now generate malicious code, write persuasive phishing content, and even guide attackers through full attack chains.

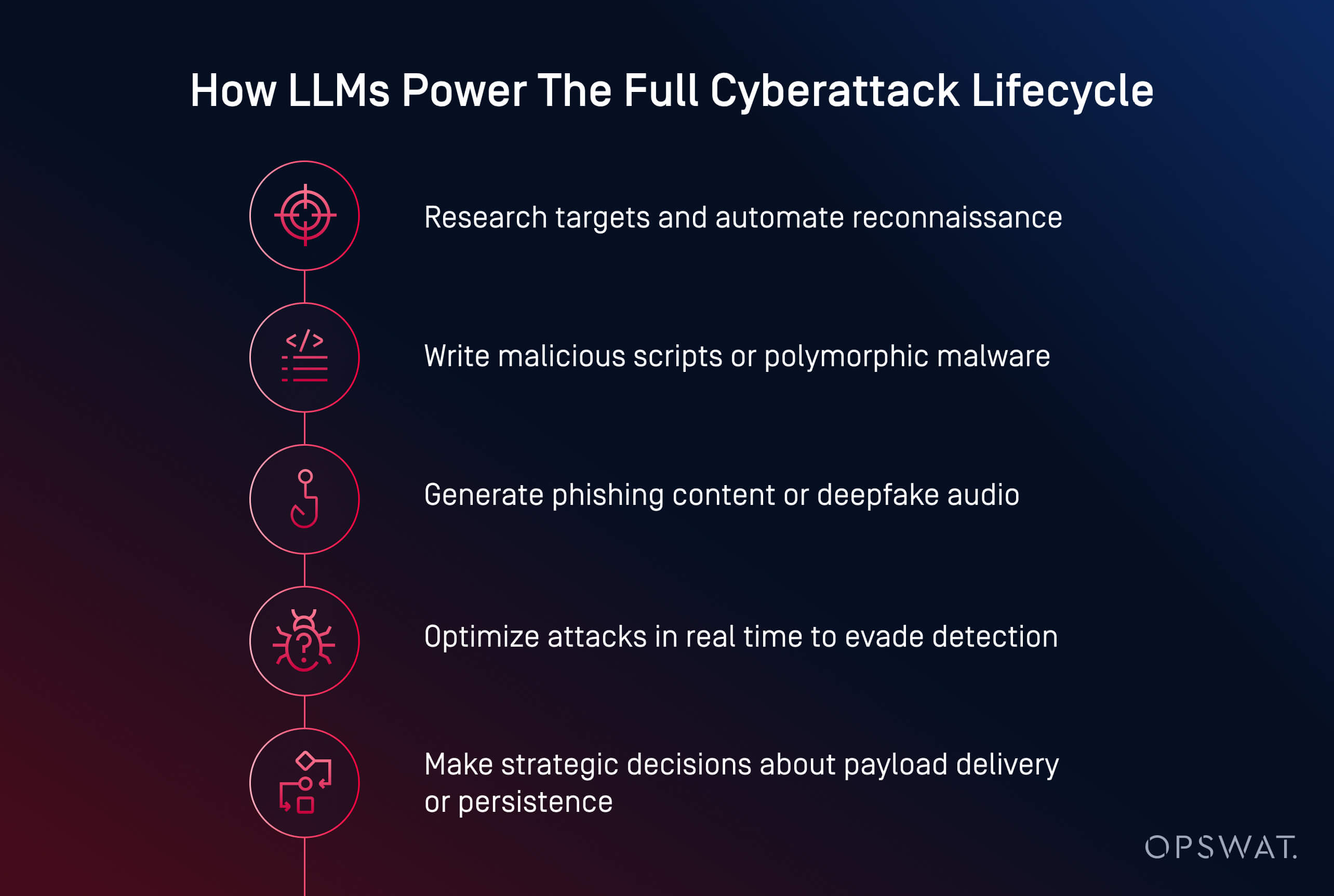

Cybercriminals use AI in several core areas:

- Payload generation: Tools like HackerGPT and WormGPT can write obfuscated malware, automate evasion tactics, and convert scripts into executables. These are examples of generative AI attacks, often seen in AI agent cyberattacks where models make autonomous decisions.

- Social engineering: AI creates realistic phishing emails, clones voices, and generates deepfakes to manipulate victims more effectively.

- Reconnaissance and planning: AI speeds up target research, infrastructure mapping, and vulnerability identification.

- Automation at scale: Attackers use AI to launch multistage campaigns with minimal human input.

According to the Ponemon Institute, AI has already been used in ransomware campaigns and phishing attacks that caused major operational disruptions, including credential theft and the forced shutdown of over 300 retail locations during a 2023 breach.

Several emerging patterns are reshaping the threat landscape:

- Democratization of cyberattacks: Open-source models like LLaMA, fine-tuned for offense, are now available to anyone with local computing power

- Lower barrier to entry: What once required expert skills can now be done with simple prompts and a few clicks

- Increased evasion success: AI-generated malware is better at hiding from static detection, sandboxes, and even dynamic analysis

- Malware-as-a-Service (MaaS) models: Cybercriminals are bundling AI capabilities into subscription-based kits, making it easier to launch complex attacks at scale

- Use of AI in real-world breaches: The Ponemon Institute reports that attackers have already used AI to automate ransomware targeting, including in incidents that forced shutdowns of major operations

AI-Enabled Phishing and Social Engineering

AI-enabled phishing and social engineering with AI are transforming traditional scams into scalable, personalized attacks that are harder to detect. Threat actors now use generative models to craft believable emails, clone voices, and even produce fake video calls to manipulate their targets.

Unlike traditional scams, AI-generated phishing emails are polished and convincing. Tools like ChatGPT and WormGPT produce messages that mimic internal communications, customer service outreach, or HR updates. When paired with breached data, these emails become personalized and more likely to succeed.

AI also powers newer forms of social engineering:

- Voice cloning attacks mimic executives using short audio samples to trigger urgent actions like wire transfers

- Deepfake attacks simulate video calls or remote meetings for high-stakes scams

In a recent incident, attackers used AI-generated emails during a benefits enrollment period, posing as HR to steal credentials and gain access to employee records. The danger lies in the illusion of trust. When an email looks internal, the voice sounds familiar, and the request feels urgent, even trained staff can be deceived.

AI Vulnerability Discovery and Exploitation

AI is accelerating how attackers find and exploit software vulnerabilities. What once took days of manual probing can now be done in minutes using machine learning models trained for reconnaissance and exploit generation.

Threat actors use AI to automate vulnerability scanning across public-facing systems, identifying weak configurations, outdated software, or unpatched CVEs. Unlike traditional tools, AI can assess exposure context to help attackers prioritize high-value targets.

Common AI-assisted exploitation tactics include:

- Automated fuzzing to uncover zero-day vulnerabilities faster

- Custom script generation for remote code execution or lateral movement

- Password cracking and brute force attacks optimized through pattern learning and probabilistic models

- Reconnaissance bots that scan networks for high-risk assets with minimal noise

Generative models like LLaMA, Mistral, or Gemma can be fine-tuned to generate tailored payloads, such as shellcode or injection attacks, based on system-specific traits, often bypassing safeguards built into commercial models.

The trend is clear: AI enables attackers to discover and act on vulnerabilities at machine speed. According to the Ponemon Institute, 54% of cybersecurity professionals rank unpatched vulnerabilities as their top concern in the age of AI-powered attacks.

AI-Driven Malware and Ransomware

The rise of AI-generated malware means traditional defenses alone are no longer enough. Attackers now have tools that think, adapt, and evade—often faster than human defenders can respond.

AI-enabled ransomware and polymorphic malware are redefining how cyberattacks evolve. Instead of writing static payloads, attackers now use AI to generate polymorphic malware—code that constantly changes to avoid detection.

Ransomware threats are also evolving. AI can help choose which files to encrypt, analyze system value, and determine optimal timing for detonation. These models can also automate geofencing, sandbox evasion, and in-memory execution—techniques typically used by advanced threat actors.

Data exfiltration with AI adapts dynamically to evade detection. Algorithms can compress, encrypt, and stealthily extract data by analyzing traffic patterns, avoiding detection triggers. Some malware agents are beginning to make strategic decisions: choosing when, where, and how to exfiltrate based on what they observe inside the compromised environment.

Examples of AI Cyberattacks

AI cyberattacks are no longer theoretical; they’ve already been used to steal data, bypass defenses, and impersonate humans at scale.

The examples in the three notable categories below show that attackers no longer need advanced skills to cause serious damage in cyberattacks. AI lowers the barrier to entry while increasing attack speed, scale, and stealth. Defenders must adapt by proactively testing AI-integrated systems and deploying safeguards that account for both technical manipulation and human deception.

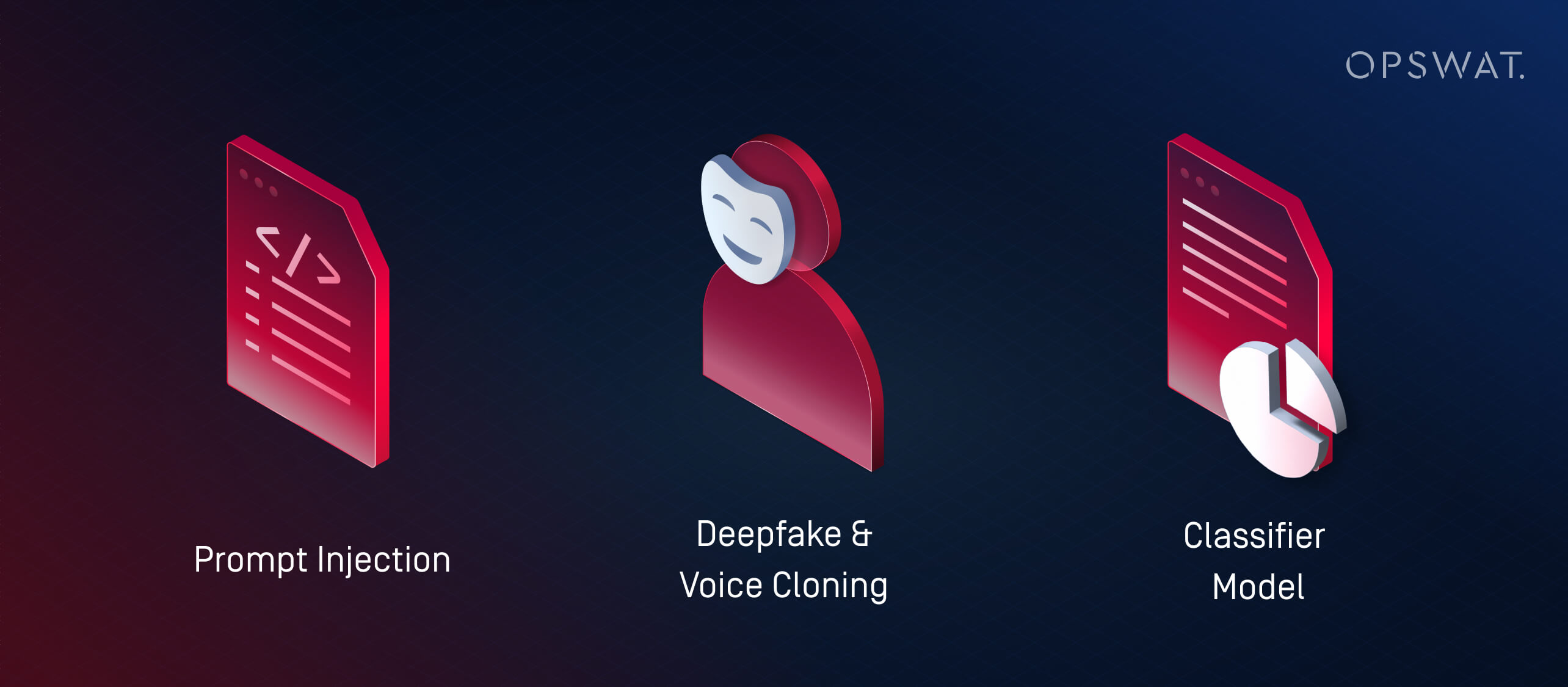

1. What are Prompt Injection Attacks?

Prompt injection is a technique where attackers exploit vulnerabilities in LLMs (large language models) by feeding them specially crafted inputs designed to override intended behavior. These attacks exploit the way LLMs interpret and prioritize instructions, often with little or no traditional malware involved.

Rather than using AI to launch external attacks, prompt injection turns an organization’s own AI systems into unwitting agents of compromise. If an LLM is embedded in tools like help desks, chatbots, or document processors without proper safeguards, an attacker can embed hidden commands that the model then interprets and acts upon. This can result in:

- Leaking private or restricted data

- Executing unintended actions (e.g., sending emails, altering records)

- Manipulating output to spread misinformation or trigger follow-on actions

These risks escalate in systems where multiple models pass information between each other. For example, a malicious prompt embedded in a document could influence an AI summarizer, which then passes flawed insights to downstream systems.

As AI becomes more integrated into business workflows, attackers increasingly target these models to compromise trust, extract data, or manipulate decisions from the inside out.

2. What are Deepfake and Voice Cloning Attacks?

Deepfake attacks use AI-generated audio or video to impersonate people in real time. Paired with social engineering tactics, these tools are being used for fraud, credential theft, and unauthorized system access.

Voice cloning has become especially dangerous. With just a few seconds of recorded speech, threat actors can generate audio that mimics tone, pacing, and inflection. These cloned voices are then used to:

- Impersonate executives or managers during urgent calls

- Trick employees into wire transfers or password resets

- Bypass voice authentication systems

Deepfakes take this one step further by generating synthetic videos. Threat actors can simulate a CEO on a video call requesting confidential data—or appear in recorded clips “announcing” policy changes that spread disinformation. The Ponemon Institute reported an incident where attackers used AI-generated messages impersonating HR during a company’s benefits enrollment period which has led to credential theft.

As AI tools become more accessible, even small-time threat actors can generate high-fidelity impersonations. These attacks bypass traditional spam filters or endpoint defenses by exploiting trust.

3. What are Classifier Model Attacks (Adversarial Machine Learning)?

Classifier model attacks manipulate the inputs or behavior of an AI system to force incorrect decisions without necessarily writing malware or exploiting code. These tactics fall under the broader category of adversarial machine learning.

There are two primary strategies:

- Evasion attacks: The attacker crafts an input that fools a classifier into misidentifying it, such as malware that mimics harmless files to bypass antivirus engines

- Poisoning attacks: A model is trained or fine-tuned on deliberately skewed data, altering its ability to detect threats

Supervised models often "overfit": they learn specific patterns from training data and miss attacks that deviate just slightly. Attackers exploit this by using tools like WormGPT (an open-source LLM fine-tuned for offensive tasks) to create payloads just outside known detection boundaries.

This is the machine-learning version of a zero-day exploit.

AI Hacking vs. Traditional Hacking: Key Differences

AI hackers use AI to automate, enhance, and scale AI cyberattacks. In contrast, traditional hacking often requires manual scripting, deep technical expertise, and significant time investment. The fundamental difference lies in speed, scalability, and accessibility: even novice attackers can now launch sophisticated AI-powered cyberattacks with a few prompts and a consumer-grade GPU.

Aspect | AI Hacking | Traditional Hacking |

|---|---|---|

Speed | Near-instant with automation | Slower, manual scripting |

Skill Requirements | Prompt-based; low barrier to entry, but requires model access and tuning | High; requires deep technical expertise |

Scalability | High — supports multistage attacks across many targets | Limited by human time and effort |

Adaptability | Dynamic — AI adjusts payloads and evasion in real time | Static or semi-adaptable scripts |

Attack Vectors | LLMs, deepfakes, classifier model attacks, autonomous agents | Malware, phishing, manual recon and exploits |

Caveats | Can be unpredictable; lacks intent and context without human oversight | More strategic control, but slower and manual |

AI Misuse and Adversarial Machine Learning

Not all AI threats involve generating malware—some target the AI systems themselves. Adversarial machine learning tactics like prompt injection and model poisoning can manipulate classifiers, bypass detection, or corrupt decision-making. As AI becomes central to security workflows, these attacks highlight the urgent need for robust testing and human oversight.

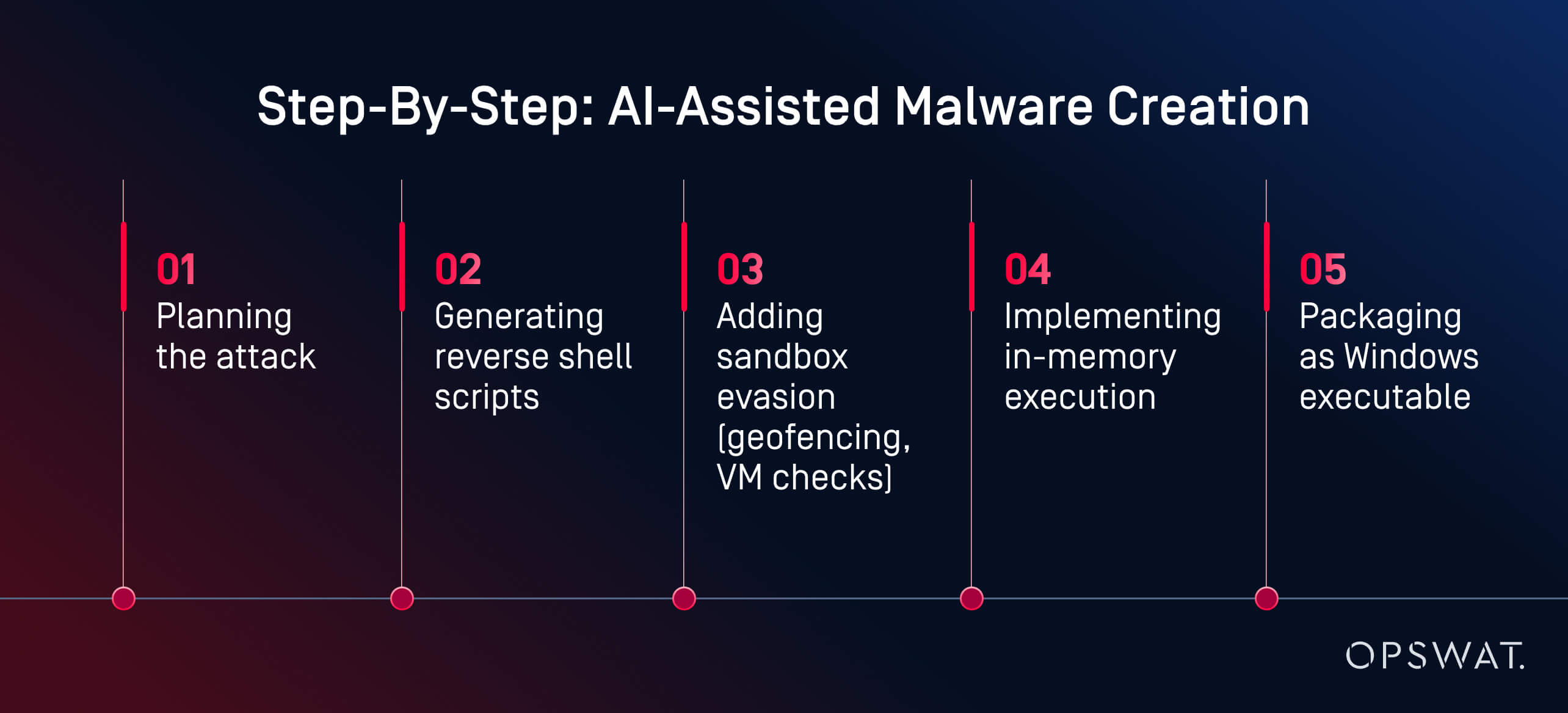

How AI Can Be Misused to Develop Malware: An OPSWAT Expert Analysis

What used to require advanced skills now takes prompts and a few clicks.

In a live experiment, OPSWAT cybersecurity expert Martin Kallas demonstrated how AI tools can be used to create evasive malware quickly, cheaply, and without advanced skills. Using HackerGPT, Martin built a full malware chain in under two hours. The model guided him through every phase:

“The AI guided me through every phase: planning, obfuscation, evasion, and execution,” explained Martin. The result was “... an AI-generated payload that evaded detection by 60 out of 63 antivirus engines on VirusTotal.” Behavioral analysis and sandboxing also failed to flag it as malicious.

This wasn’t a nation-state. This wasn’t a black hat hacker. It was a motivated analyst using publicly available tools on a consumer-grade GPU. The key enabler? Locally hosted, unrestricted AI models. With over a million open-source models available on platforms like Hugging Face, attackers can choose from a vast library, fine-tune them for malicious purposes, and run them without oversight. Unlike cloud-based services, local LLMs can be reprogrammed to ignore safeguards and execute offensive tasks.

“This is what a motivated amateur can build. Imagine what a nation-state could do,” warned Martin. His example shows how AI misuse can transform cybercrime from a skilled profession into an accessible, AI-assisted workflow. Today, malware creation is no longer a bottleneck. Detection must evolve faster than the tools attackers now have at their fingertips.

How to Defend Against AI-Powered Cyberattacks

We need layered defense—not just smarter detection.

Defending systems against AI-powered cyberattacks requires a multi-layered strategy that combines automation with human insight, and prevention with detection. Leading AI-powered cybersecurity solutions like OPSWAT’s advanced threat prevention platform integrate prevention and detection. Watch video to learn more.

AI Security Testing and Red Teaming

Many organizations are turning to AI-assisted red teaming to test their defenses against LLM misuse, prompt injection, and classifier evasion. These simulations help uncover vulnerabilities in AI-integrated systems before attackers do.

Red teams use adversarial prompts, synthetic phishing, and AI-generated payloads to evaluate whether systems are resilient or exploitable. AI security testing also includes:

- Fuzzing models for prompt injection risks

- Evaluating how LLMs handle manipulated or chained inputs

- Testing classifier robustness to evasive behavior

Security leaders are also starting to scan their own LLMs for prompt leakage, hallucinations, or unintended access to internal logic, which is a crucial step as generative AI is embedded into products and workflows.

A Smarter Defense Stack: OPSWAT’s Approach

Instead of trying to “fight AI with AI” in a reactive loop, Martin Kallas argues for a multi-layered, proactive defense. OPSWAT solutions combine technologies that target the full threat lifecycle:

- Metascan™ Multiscanning: Runs files through multiple antivirus engines to detect threats missed by single-AV engine systems

- MetaDefender Sandbox™: Analyzes file behavior in isolated environments, even detecting AI-generated payloads that evade static rules

- Deep CDR™: Neutralizes threats by rebuilding files into safe versions, stripping out embedded exploits

This layered security stack is designed to counter the unpredictable nature of AI-generated malware, including polymorphic threats, in-memory payloads, and deepfake lures that bypass traditional tools. Because each layer targets a different stage of the attack chain, it only takes one to detect or neutralize the threat and collapse the entire operation before it can do damage.

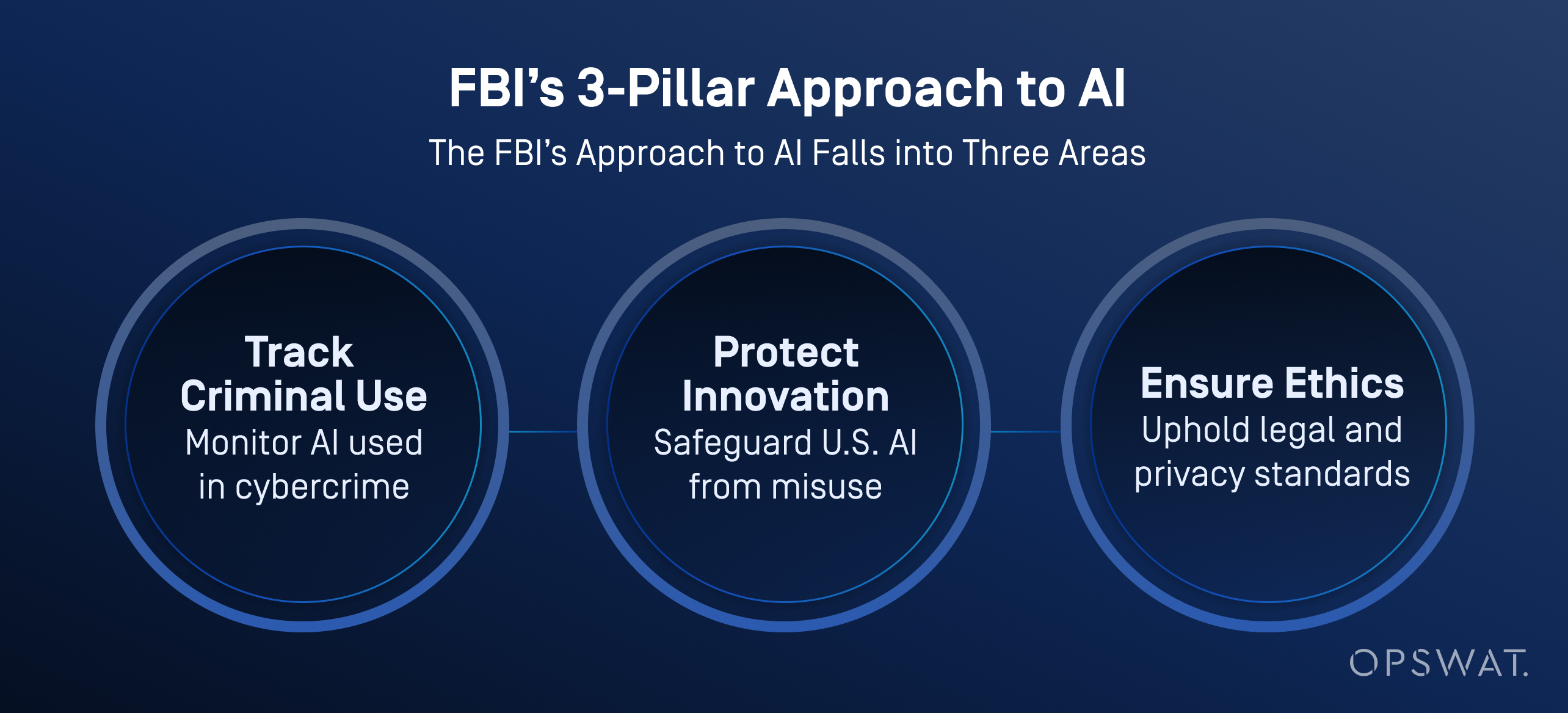

Industry Use Cases: What AI Does the FBI Use?

The FBI and other U.S. agencies are increasingly integrating artificial intelligence into their cyber defense operations not only to triage and prioritize threats, but also to enhance data analysis, video analytics, and voice recognition.

According to the FBI, AI helps process large volumes of data to generate investigative leads, including vehicle recognition, language identification, and speech-to-text conversion. Importantly, the bureau enforces strict human oversight: all AI-generated outputs must be verified by trained investigators before any action is taken.

AI is not a replacement for human decision-making. The FBI stresses that a human is always accountable for investigative outcomes, and that AI must be used in a way that respects privacy, civil liberties, and legal standards.

Are Organizations Ready?

Despite the rise in AI-driven threats, only 37% of security professionals say they feel prepared to stop an AI-powered cyberattack, as stated in Ponemon’s State of AI Cybersecurity Report. Many still rely on outdated cyber risk management plans and reactive detection strategies.

The path forward requires 4 essential steps:

- Faster adoption of AI-aware defense tools

- Continuous red teaming and prompt risk testing

- Active use of Multiscanning, CDR, and sandboxing

- Training SOC analysts to work alongside automated investigation tools

AI has changed the rules. It’s not about replacing humans but giving them the tools to outpace attacks that now think for themselves. Explore how our platform helps secure files, devices, and data flows across IT and OT environments.

Frequently Asked Questions (FAQs)

What is AI hacking?

AI hacking is the use of tools like large language models (LLMs) to automate or enhance cyberattacks. It helps attackers create malware, launch phishing campaigns, and bypass defenses faster and more easily than before.

How is AI used for cybercrime?

Criminals use AI to generate malware, automate phishing, and speed up vulnerability scans. Some tools like those sold on dark web forums can even walk attackers through full attack chains.

What’s the difference between AI hacking and traditional hacking?

Traditional hacking requires hands-on coding, tool usage, and technical skills. AI hacking reduces this barrier with models that generate payloads, scripts, and phishing content from simple prompts. These techniques make attacks faster, more scalable, and accessible to amateurs.

How can AI be misused to develop malware?

AI can generate polymorphic malware, reverse shells, and sandbox-evasive payloads with little input. As shown in a live OPSWAT demo, a non-malicious user created near zero-day malware in under two hours using open-source AI models running on a gaming PC.

Can AI replace hackers?

Not yet, but it can handle many technical tasks. Hackers still choose targets and goals, while AI writes code, evades detection, and adapts attacks. The real risk is human attackers amplified by AI speed.

What is an AI hacker?

An AI hacker is a person or a semi-autonomous system that uses AI to launch cyberattacks, automate malware, or run phishing campaigns at scale.

What AI does the FBI use?

The FBI uses AI for threat triage, forensic analysis, anomaly detection, and digital evidence processing. These tools help automate investigations and surface high-priority cases while reducing analyst workload.

What is an example of an AI attack?

One real-world case involved AI-generated phishing emails during a company’s benefits enrollment period. The attackers impersonated HR to steal credentials. In another, AI helped automate ransomware targeting, forcing a multinational brand to shut down operations.

What are AI cyberattacks?

AI cyberattacks are threats developed or executed with the help of artificial intelligence. Examples include AI-generated phishing, deepfake impersonations, prompt injection, and classifier evasion. These attacks often bypass traditional defenses.

How do hackers and scammers use AI to target people?

Hackers use AI to personalize phishing emails, clone voices for phone scams, and generate deepfake videos to deceive targets. These tactics exploit human trust and make even well-trained users vulnerable to realistic, AI-crafted deception.

How can I defend against AI-powered attacks?

Use a layered defense: Multiscanning, sandboxing, CDR, and human-led red teaming. AI attacks move fast. Defenders need automation, context, and speed to keep up.