MetaDefender Core uses multiple virus and malware detection engines and aggregates their findings to identify potential threats. Another way to detect threats is to run the file in a virtual environment, or 'sandbox', and observe whether it exhibits any malicious behavior. These environments are usually virtual machines with a specific combination of virtualized hardware, operating system, and installed applications, running on one of several virtualization technologies.
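The idea of aggregating findings from several engines can be illustrated with a minimal sketch. It assumes that each engine returns a simple threat/no-threat verdict and that a file is flagged when at least one engine reports a threat. The engine names and the `aggregate_verdicts` helper are hypothetical and do not reflect MetaDefender Core's actual API or scoring logic.

```python
from dataclasses import dataclass


@dataclass
class AggregateResult:
    flagged: bool        # True if any engine reported a threat
    detections: int      # number of engines that reported a threat
    total_engines: int   # number of engines that scanned the file

    @property
    def detection_ratio(self) -> float:
        """Fraction of engines that reported a threat (0.0 to 1.0)."""
        return self.detections / self.total_engines if self.total_engines else 0.0


def aggregate_verdicts(verdicts: dict[str, bool]) -> AggregateResult:
    """Combine per-engine verdicts (engine name -> threat found?) into one result.

    Hypothetical rule: a single positive engine is enough to flag the file.
    """
    detections = sum(1 for found in verdicts.values() if found)
    return AggregateResult(
        flagged=detections > 0,
        detections=detections,
        total_engines=len(verdicts),
    )


if __name__ == "__main__":
    # Hypothetical engine names and verdicts for a single file.
    result = aggregate_verdicts({"engine_a": True, "engine_b": False, "engine_c": True})
    print(result.flagged, result.detections, result.total_engines)  # True 2 3
```

Keeping the per-engine count alongside the overall verdict matters because, as the results below show, the number of engines that flag a given file can vary widely.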

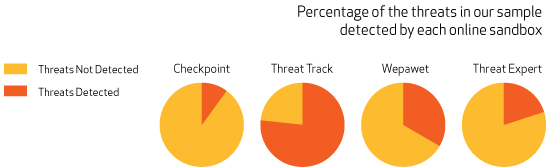

There are multiple public online sandboxing tools that are free to use. We selected four of them (ThreatTrack, Check Point, ThreatExpert, and Wepawet) to compare their dynamic analysis of file behavior against MetaDefender Cloud's static file scanning. We chose 30 known malware files, disguised as or embedded in document files, and submitted each one to MetaDefender Cloud and to the online sandboxes. The results varied from file to file and are summarized below. Note that each sandbox supports only specific file types, so some of our samples could not be scanned by some of the sandboxes.

MetaDefender Cloud detected all of the files, although the number of engines flagging each file varied greatly: out of 43 engines, the lowest number detecting a given threat was 10 and the highest was 30. Each file was detected by a different set of engines.
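As a quick check on how these counts relate to the percentages in the results table at the end of this post (assuming those percentages are computed against the same 43 engines), dividing by 43 gives:

$$
\frac{10}{43} \approx 23.3\,\% \qquad \text{and} \qquad \frac{30}{43} \approx 69.8\,\%
$$

These round to the lowest (23%, Backdoor.BackOrifice.Melt) and highest (70%, Exploit_c.RQA) MetaDefender Cloud values in that table.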

When we tested the same files in the public sandboxes, we also saw wide variation in detection rates: one sandbox detected only 3 of the 30 threats, while another detected 23. Which sandboxes caught a threat depended on the file being tested.

One of the threats we tested exploited CVE-2012-0158, a vulnerability in some Microsoft products. When the document is opened, a Trojan is installed in the background while a harmless decoy document is displayed to the user. None of the online sandboxes reported this threat. We do not know exactly how each sandbox handles files (revealing this would be a potential security hole for the sandbox providers), but one likely reason the sandboxes missed it is that the vulnerability exists only in specific versions or patch levels of the affected Office applications. If a piece of malware targets a vulnerability that is present only in certain versions of an operating system or application, a sandbox will not detect the threat unless its environment contains that exact version.

Sandboxing's strength is in testing for targeted threats aimed at specific user or corporate environments. By reproducing a specific combination of operating system, hardware, and installed applications, a sandbox can test many potential threats against that environment. Threats that are not targeted at that environment, however, may go undetected. Another file we tested exploited CVE-2009-1862, which affects certain versions of Adobe Acrobat and Adobe Reader (9.0 to 9.1.2) and Adobe Flash Player (various sub-versions of 9 and 10). Some sandboxes detected this threat and others did not. Again, because this vulnerability exists only in specific versions of Acrobat, Reader, and Flash Player, the sandboxes that found the threat most likely had those versions installed in their environments, while the others did not.
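The version dependence described above can be sketched as a simple range check: a sandbox image can only trigger a version-specific exploit if the application version installed in it falls inside the vulnerable range. The sketch uses the Acrobat/Reader range quoted for CVE-2009-1862 (9.0 through 9.1.2). The sandbox image names and their installed versions are hypothetical, and real exploitability also depends on factors such as platform and configuration that this check ignores.

```python
# Vulnerable Acrobat/Reader range for CVE-2009-1862, as quoted in the text (inclusive).
VULNERABLE_RANGE = ("9.0", "9.1.2")

# Hypothetical sandbox images and the Reader version each one has installed.
SANDBOX_IMAGES = {
    "sandbox_a": "8.1.2",
    "sandbox_b": "9.1",
    "sandbox_c": "9.1.3",
    "sandbox_d": "9.0",
}


def parse_version(text: str, width: int = 4) -> tuple[int, ...]:
    """Turn a dotted version such as '9.1.2' into a tuple of ints.

    Pads with zeros so that '9.0' and '9.0.0' compare as equal.
    """
    parts = [int(part) for part in text.split(".")]
    parts += [0] * (width - len(parts))
    return tuple(parts)


def in_range(version: str, low: str, high: str) -> bool:
    """Return True if low <= version <= high, comparing numerically."""
    return parse_version(low) <= parse_version(version) <= parse_version(high)


def images_that_can_trigger(images: dict[str, str], low: str, high: str) -> list[str]:
    """List the sandbox images whose installed version lies in the vulnerable range."""
    return sorted(name for name, version in images.items() if in_range(version, low, high))


if __name__ == "__main__":
    print(images_that_can_trigger(SANDBOX_IMAGES, *VULNERABLE_RANGE))
    # ['sandbox_b', 'sandbox_d']
```

In this toy setup only two of the four images could ever observe the exploit's behavior, which mirrors how some sandboxes detected the CVE-2009-1862 sample and others did not.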

What this shows is that sandboxing technology is similar to other virus and malware detection technologies: no single engine or sandbox will detect every threat, so the best way to increase detection rates is to use multiple engines or sandboxes, each of which detects a different set of threats. Taking the idea one step further, combining detection techniques, such as multiscanning and sandboxing, will also increase the overall malware and virus detection rate.
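The benefit of combining detectors can be sketched with a set union: if each engine or sandbox is modeled as the set of samples it flags, the combined detector flags the union of those sets, which is never smaller than any single member's set. The detector names and sample IDs below are hypothetical placeholders, not results from this test.

```python
def combined_coverage(detections: dict[str, set[str]]) -> set[str]:
    """Return every sample flagged by at least one detector (the union of all sets)."""
    covered: set[str] = set()
    for flagged in detections.values():
        covered |= flagged
    return covered


if __name__ == "__main__":
    samples = {f"s{i}" for i in range(1, 11)}  # ten hypothetical samples
    detectors = {
        "engine_a": {"s1", "s2", "s3"},
        "engine_b": {"s3", "s4", "s5", "s6"},
        "sandbox_a": {"s6", "s7", "s8"},
    }
    for name, flagged in detectors.items():
        print(f"{name}: {len(flagged)}/{len(samples)}")
    combined = combined_coverage(detectors)
    print(f"combined: {len(combined)}/{len(samples)}")  # combined: 8/10
```

Each detector alone covers at most 4 of the 10 samples, while the union covers 8. The two samples that no detector flags (s9 and s10) are a reminder that combining detectors raises coverage but does not guarantee it.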


Below are the detailed multiscanning vs. sandboxing results for the 30 threats we tested. If you are interested in obtaining these samples, please email us.

| Threat | MetaDefender Cloud: engines that detected the threat (%) | Online sandboxes: sandboxes that detected the threat (%) |
|---|---|---|
| JS_CRYPTED.SM1 | 58 | 75 |
| TROJ_PIDIEF.SMZB | 63 | 75 |
| Exploit-PDF.q.gen!stream | 63 | 50 |
| Exploit.PDF.Jsc.EH | 60 | 50 |
| PDF/Obfusc.H!Camelot | 65 | 50 |
| Backdoor.BackOrifice.A2 | 40 | 50 |
| Exploit.JS.Pdfka!E2 | 67 | 50 |
| JS/Crypt.AAAI!tr | 65 | 50 |
| Exploit.BT | 65 | 50 |
| Trojan.JS.QAI | 65 | 50 |
| Exploit_c.RQA | 70 | 75 |
| W32/Bagle.BC@mm | 44 | 0 |
| W32/Bagle.BC@mm | 51 | 25 |
| Trivial.42.H | 33 | 50 |
| Backdoor/Win32.BO.gen. | 44 | 25 |
| Backdoor.BackOrifice.A2 | 44 | 50 |
| BackOrifice | 51 | 25 |
| BackOrifice.Trojan | 44 | 0 |
| W32/Bagle.AP@mm | 51 | 50 |
| Exploit-CVE2012-0158.f!rtf trojan | 40 | 0 |
| Exploit/MSWord.CVE-2012-0158 | 30 | 25 |
| TROJ_ARTIEF.SDF | 35 | 25 |
| Trojan.Win32.A.EX-CVE-2012-0158.63685 | 26 | 25 |
| TR/Dropper.Gen | 49 | 25 |
| Worm.Mydoom-27 | 51 | 0 |
| Backdoor.BackOrifice.Melt | 23 | 0 |
| Win32.Worm.Sasser.B | 51 | 50 |
| Worm.Sasser.C | 51 | 0 |
| W32/AgoBot.A!worm | 44 | 50 |
| Swedish_Boys.459.A | 35 | 0 |
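For readers who want to summarize the results table programmatically, the sketch below transcribes its rows and computes a few aggregate figures: the range of MetaDefender Cloud detection percentages and the number of threats that none of the online sandboxes reported. The row data is copied from the table; the `summarize` helper is only an illustration.

```python
# Rows transcribed from the results table:
# (threat name, % of MetaDefender Cloud engines, % of online sandboxes).
RESULTS: list[tuple[str, int, int]] = [
    ("JS_CRYPTED.SM1", 58, 75), ("TROJ_PIDIEF.SMZB", 63, 75),
    ("Exploit-PDF.q.gen!stream", 63, 50), ("Exploit.PDF.Jsc.EH", 60, 50),
    ("PDF/Obfusc.H!Camelot", 65, 50), ("Backdoor.BackOrifice.A2", 40, 50),
    ("Exploit.JS.Pdfka!E2", 67, 50), ("JS/Crypt.AAAI!tr", 65, 50),
    ("Exploit.BT", 65, 50), ("Trojan.JS.QAI", 65, 50),
    ("Exploit_c.RQA", 70, 75), ("W32/Bagle.BC@mm", 44, 0),
    ("W32/Bagle.BC@mm", 51, 25), ("Trivial.42.H", 33, 50),
    ("Backdoor/Win32.BO.gen.", 44, 25), ("Backdoor.BackOrifice.A2", 44, 50),
    ("BackOrifice", 51, 25), ("BackOrifice.Trojan", 44, 0),
    ("W32/Bagle.AP@mm", 51, 50), ("Exploit-CVE2012-0158.f!rtf trojan", 40, 0),
    ("Exploit/MSWord.CVE-2012-0158", 30, 25), ("TROJ_ARTIEF.SDF", 35, 25),
    ("Trojan.Win32.A.EX-CVE-2012-0158.63685", 26, 25), ("TR/Dropper.Gen", 49, 25),
    ("Worm.Mydoom-27", 51, 0), ("Backdoor.BackOrifice.Melt", 23, 0),
    ("Win32.Worm.Sasser.B", 51, 50), ("Worm.Sasser.C", 51, 0),
    ("W32/AgoBot.A!worm", 44, 50), ("Swedish_Boys.459.A", 35, 0),
]


def summarize(rows: list[tuple[str, int, int]]) -> dict[str, int]:
    """Compute a few aggregate figures from the results table."""
    engine_pcts = [engines for _, engines, _ in rows]
    return {
        "threats": len(rows),
        "min_engine_pct": min(engine_pcts),
        "max_engine_pct": max(engine_pcts),
        # Threats flagged by at least one MetaDefender Cloud engine.
        "detected_by_multiscanning": sum(1 for pct in engine_pcts if pct > 0),
        # Threats that no online sandbox reported.
        "missed_by_all_sandboxes": sum(1 for _, _, sandboxes in rows if sandboxes == 0),
    }


if __name__ == "__main__":
    for key, value in summarize(RESULTS).items():
        print(f"{key}: {value}")
```

Running it reports 30 threats, all flagged by MetaDefender Cloud with between 23% and 70% of engines, and 7 threats that no online sandbox reported. Keep in mind that the sandbox percentages are over four sandboxes, not all of which support every file type, so the two columns are not directly comparable.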