Author: Vinh T. Nguyen - Team Leader

Introduction

A vulnerability in a computer system is a weakness that an attacker can exploit to use that system in an unauthorized way [1]. Software vulnerabilities, in particular, are a cyber-security challenge that organizations must deal with on a regular basis, due to the ever-changing nature and complexity of software.

The most common methods organizations use to assess their exposure to vulnerabilities in their computer networks fall into two major categories: network-based and host-based [2]. Network-based methods probe the network without logging in to each host, to detect vulnerable services, devices and data in transit. Host-based methods log in to each host and gather a list of vulnerable software, components and configurations. Each category handles risk in a different way, yet neither can detect a vulnerability until the vulnerable software is already deployed on a host.

In practice, assessments are typically scheduled so that they do not affect normal operations. This leaves a window of opportunity, between the time vulnerable software is deployed and the time the next assessment begins, during which attackers can compromise a host and, through it, a network.

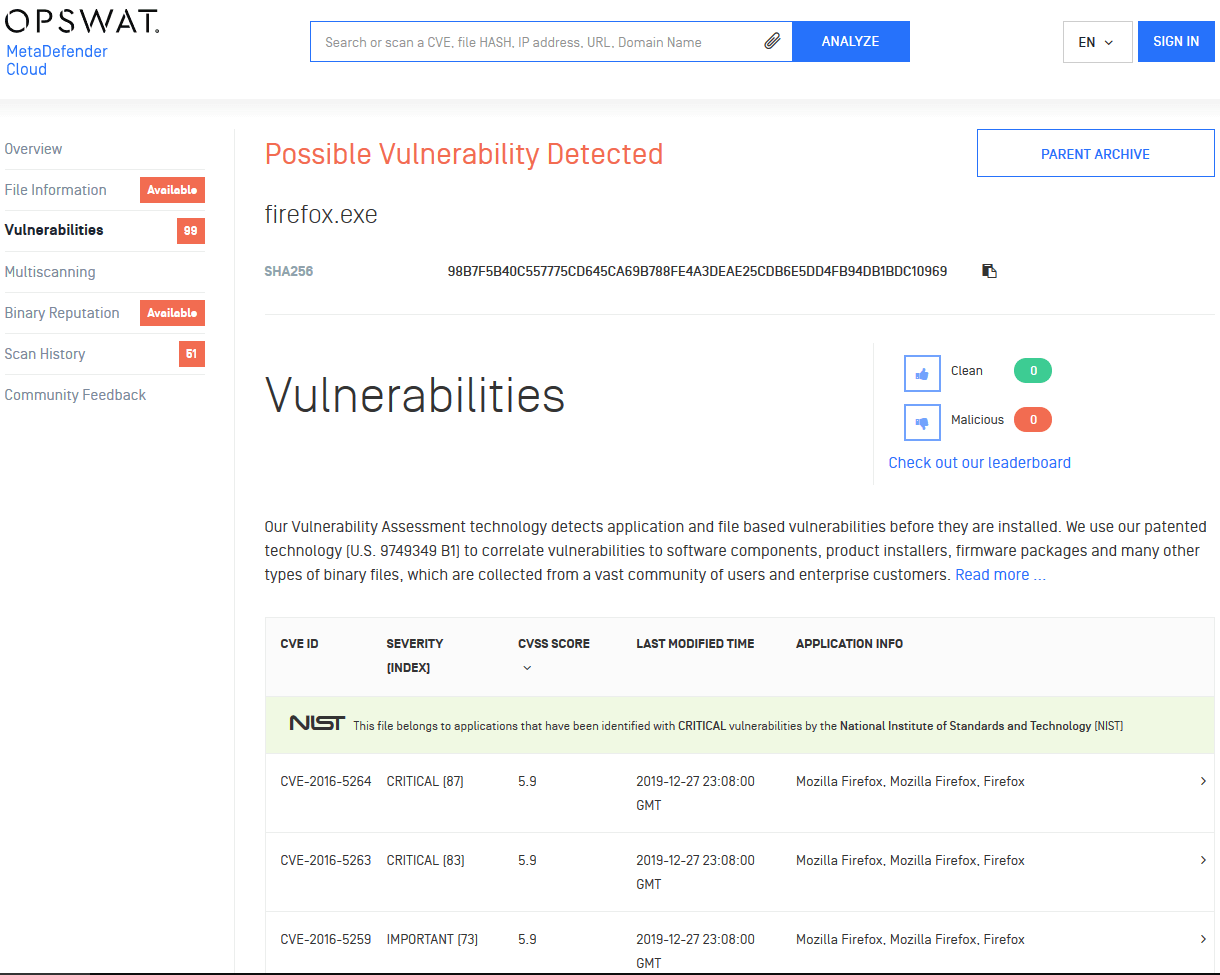

This situation calls for a scanning method that can close that window and reduce overall vulnerability risk. OPSWAT file-based vulnerability assessment technology does that by associating binary files (such as installers, executables and dynamic libraries) with reported vulnerabilities. This method can detect vulnerable software components before they are deployed, so that security analysts and sysadmins can quickly take appropriate action, thus closing the window.

In the following sections, we explain the technology and its use cases in more detail and give examples of known attacks related to these use cases. We then walk through an exploitation demo and conclude with a summary of the technology and its potential.

Why Another Detection Method is Needed

Limitations of Traditional Methods

Traditional methods of detecting software vulnerabilities operate at an abstract level. When network-based scanning probes a computer network and host-based scanning collects data from a machine, they usually decide which checks to run based on the system's environment, both to keep performance acceptable (scan time, network bandwidth, memory usage, etc.) and to filter out irrelevant results. Sometimes these methods depend on the environment so heavily that an idiosyncrasy in that same environment causes them to skip checks they should have run.

For example, if a vulnerable network service is shut down before an assessment (accidentally or intentionally), the scan will not detect any vulnerability in the service. Also, deleting the Windows registry key that contains the installation path to an application may leave the application itself and its vulnerabilities intact and undetected by host-based scanning. In both cases, the scanning methods are less effective because they rely on the footprint of the installed product.

An installed product is a collection of files (such as executables, libraries and databases) packaged together and combined with deployment logic. The deployment logic usually follows a convention for writing the product's footprint to a known location (for example, the Registry on Windows, or port 3306 for MySQL). This footprint does not define the product itself and can change at any time during the product's lifetime. Therefore, relying on the footprint to detect the product and its vulnerabilities, as both network-based and host-based scanning do, carries a risk of missed detection.
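The registry example above can be reduced to a toy model. The sketch below is purely illustrative: the `Host` class, the two detector functions, and the `ExampleApp` paths are all hypothetical names invented here, and no real scanner is this simple. It only shows why removing the footprint hides a product from a footprint-driven check while its files remain on disk.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Toy model of a machine: a footprint store plus the files on disk."""
    registry: dict = field(default_factory=dict)  # product name -> install path
    files: set = field(default_factory=set)       # file paths present on disk


def detect_by_footprint(host, product):
    """Footprint-driven check: the product exists only if it is registered."""
    return product in host.registry


def detect_by_files(host, component_paths):
    """File-driven check: the product exists if any of its component files do."""
    return any(path in host.files for path in component_paths)


if __name__ == "__main__":
    # Hypothetical product and paths, used only for illustration.
    dll = "C:/Apps/ExampleApp/example.dll"
    host = Host(registry={"ExampleApp": "C:/Apps/ExampleApp"}, files={dll})

    del host.registry["ExampleApp"]  # footprint removed, files left behind

    print(detect_by_footprint(host, "ExampleApp"))  # False
    print(detect_by_files(host, [dll]))             # True
```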

Introducing File-Based Scanning

File-based vulnerability assessment is different from traditional assessment methods. As its name implies, it operates on a file-by-file basis and ignores all high-level product abstractions. By analyzing each reported vulnerability and mapping it to product installers and main component files (patent U.S. 9749349 B1), file-based vulnerability assessment can detect whether a binary file is associated with a vulnerability, thus exposing vulnerabilities even when the product is not running or its footprint has been modified.
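To make the idea of associating a file with reported vulnerabilities concrete, here is a minimal sketch. It assumes the association is keyed on a SHA-256 digest of the file, which is our simplification for illustration and not a description of how the OPSWAT technology identifies files. The function names and the database layout are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def lookup_vulnerabilities(path, database):
    """Return the vulnerability IDs associated with a file, or an empty list.

    `database` is a hypothetical dict mapping file digests to lists of IDs.
    The check looks only at the file's bytes, never at a registry entry,
    an install path, or a running service.
    """
    return database.get(sha256_of(path), [])
```

Because the lookup depends only on the file's content, it gives the same answer whether the file sits in an installer on a gateway, on a flash drive, or on a host where the product's footprint has been removed.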

While the difference between file-based and network-based scanning is clear, the difference between file-based and host-based scanning is less so. It could be argued that host-based scanning does not rely completely on the product footprint, since it also checks the file version of the product’s main component(s). The scanning logic could then be modified to perform only that file version check, which would make file-based scanning a subset of host-based scanning.

This is not the case, since:

- Host-based scanning is often hardwired to use both the system environment and the product's footprint to filter which checks to make.

- By focusing on finding and analyzing the files that cause vulnerabilities, file-based scanning can detect some vulnerabilities that are hard to detect with the host-based method, and it is suitable for more use cases.

File-based scanning can be used to detect vulnerable installers, firmware packages, library files, product component files, etc. as they pass into and out of networks through gateways or travel to and from endpoints (via email, flash drives, etc.). This enables sysadmins and users to check a product for vulnerabilities before using it, and it provides protection when a host is not supported by the host-based or network-based scanning methods the organization currently uses.

File-based vulnerability assessment technology can also be used to discover potential vulnerabilities in existing machines. Since traditional scanning methods usually perform vulnerability checks based on high-level, vague reports in public disclosure sources, the scan itself often stops at a high level (the product's footprint) and does not go into the details of either the vulnerability or the reported product.

However, since products often reuse each other's components (dynamic libraries and shared services are some examples), updating a vulnerable product, or even removing it, does not guarantee that the files causing the vulnerability are gone. They may still lie somewhere in the file system and give attackers a platform on which to reconnect those components and wreak havoc. These files often appear entirely trustworthy: they have a clear purpose, are widely known, come from trusted sources, carry valid signatures and still ship in some of the latest software packages. File-based vulnerability assessment technology enables sysadmins to scan machines and find lurking vulnerable files before attackers have the chance to use them.
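A scan for such leftover files could, in its simplest form, look like the sketch below. It assumes a hypothetical database that maps SHA-256 file digests to vulnerability IDs. That keying scheme, the function names, and the database itself are assumptions made for illustration and do not describe the actual product.

```python
import hashlib
import os


def file_sha256(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_vulnerable_files(root, database):
    """Walk `root` and return (path, vulnerability IDs) for every known match.

    `database` is a hypothetical dict mapping file digests to lists of IDs.
    Every file is checked, whether or not an installed product still
    references it, so orphaned components are reported too.
    """
    findings = []
    for folder, _subdirs, names in os.walk(root):
        for name in names:
            path = os.path.join(folder, name)
            try:
                digest = file_sha256(path)
            except OSError:
                continue  # unreadable or vanished file: skip it
            if digest in database:
                findings.append((path, database[digest]))
    return findings
```

Hashing every file on a large volume is slow. A practical scanner would likely narrow the candidates first (for example by file type), but that optimization is outside the scope of this sketch.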

Challenges with the Technology

Still, operating at the file level has its limitations. A file-based scan may mark a file as vulnerable even though the vulnerability can only be triggered when several files are loaded together (a false positive). This is partly because scanning a single file loses that context and partly because disclosure reports are vague. The scan may also mark a truly vulnerable file as clean (a false negative), because the file database is incomplete and, again, because the reports are vague.

At OPSWAT, we understand this and are constantly improving our file-based vulnerability assessment technology, so that it covers more vulnerabilities, while reducing false positive/negative rates. We have integrated this technology into many of the products in our MetaDefender family (such as MetaDefender Core, MetaDefender Cloud, Drive, Kiosk, ICAP Server, etc.), to help organizations provide in-depth defense of their critical networks [3].

Known Exploits

It is hard enough for sysadmins to monitor the disclosure reports for all the software the organization uses, let alone to know and monitor every component inside that software. As a result, software built on old, vulnerable components can evade detection and get inside organizations, which makes vulnerable components a serious problem.

An example is CVE-2019-12280 [4], an uncontrolled library search path vulnerability in Dell SupportAssist. It allows a low-privilege user to execute arbitrary code with SYSTEM privileges and gain complete control over a machine. The flaw originated in a component provided by PC-Doctor for machine diagnostics. Both vendors have released patches to remediate the problem.

Another example is CVE-2012-6706 [5], a critical memory corruption vulnerability that could lead to arbitrary code execution and compromise of a machine when opening a specially crafted file. It was originally reported to affect Sophos antivirus products, but was later found to come from a component named UnRAR, which handles file extraction [6].

Reports of these kinds of vulnerabilities are often incomplete, since too many products use the vulnerable components for all of them to be listed. Therefore, even when sysadmins know about every product in use and monitor each of them closely in the common disclosure sources, attackers still have plenty of room to slip through.

Demo

Let's take a closer look at a case where a vulnerable component causes problems on a host. We’ll use an old version of Total Commander [7] that contains an UnRAR.DLL [8] affected by CVE-2012-6706 [5]. Note that the CVE report does not mention Total Commander. Power users with administrative privileges often use this software, so successfully exploiting the vulnerability may help an attacker use a host to take control of an organization’s network.

Demo specification:

- Operating system: Windows 10 1909 x64.

- Software: Total Commander v8.01 x86 with UnRAR library v4.20.1.488.

- Crafted data: created by the Google Security Research group and published on Exploit-DB [9].

The memory corruption happens when Total Commander uses UnRAR.DLL to extract a specially crafted file. The attacker can then execute arbitrary code on the machine with the privileges of the Total Commander user. Sophisticated attackers may plant more seemingly legitimate but vulnerable files all over the machine for further attacks.

Recent versions of Total Commander do not have this vulnerability, since they use a newer version of UnRAR.DLL. So, as always, users should keep their software up to date, even if no disclosure report names it, and especially if it has not been updated for a long time (this version of Total Commander was released in 2012).
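The observation that a newer UnRAR.DLL removes the problem suggests a simple version rule, sketched below. The helper names are hypothetical. The threshold in the usage example follows the public CVE description, which refers to unrar before 5.5.5. Treating the DLL's four-part file version as directly comparable to that number is an assumption made here for illustration only.

```python
def parse_version(text):
    """Turn a dotted version string such as '4.20.1.488' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def is_older_than(found, first_fixed):
    """Return True if `found` is strictly older than `first_fixed`.

    Shorter versions are padded with zeros so that '5.5.5' and '5.5.5.0'
    compare as equal.
    """
    a, b = parse_version(found), parse_version(first_fixed)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b


if __name__ == "__main__":
    # Library version from the demo specification; threshold is an assumption.
    print(is_older_than("4.20.1.488", "5.5.5"))  # True
```

A rule like this only works once the file has been identified as an UnRAR library in the first place, which is the part that a footprint-based scan of Total Commander would not attempt.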

Conclusion

Software vulnerabilities allow attackers to gain access to or take control of organizational resources. Two common methods of detecting vulnerabilities are network-based and host-based scanning. They usually operate at a high level of abstraction and may miss important information when a computer's environment changes.

OPSWAT’s file-based vulnerability assessment technology operates at the file level to alert sysadmins to vulnerable software installers and components going into and out of the organization, reducing security risk both before deployment and during use. The technology has been integrated into MetaDefender products such as Core, Cloud API, Drive, Kiosk, etc. to cover a wide range of use cases.

Detecting vulnerabilities on a file-by-file basis differs from traditional methods and brings new potential and new use cases, as well as new challenges. At OPSWAT, we are constantly improving our technology to overcome those challenges and help protect organizations' critical networks from ever-evolving cybersecurity threats.

References

[1] "Vulnerability (computing)," [Online].

[2] "Vulnerability scanner," [Online].

[3] "MetaDefender - Advanced Threat Prevention Platform," [Online].

[4] "CVE-2019-12280 - MetaDefender," [Online].

[5] "CVE-2012-6706 - MetaDefender," [Online].

[6] "Issue 1286 - VMSF_DELTA filter in unrar allows arbitrary memory write - Project Zero," [Online].

[7] "Total Commander - home," [Online].

[8] "WinRAR archiver - RARLAB," [Online].

[9] "unrar 5.40 - 'VMSF_DELTA' Filter Arbitrary Memory Write," [Online].