Sizing Guide

Overview

MetaDefender Storage Security (MDSS) is built on container technology, so its networked services can be organized in several ways to suit your requirements. When deploying MDSS, assess the anticipated workload and operational needs first. This evaluation will guide you toward a deployment strategy that meets your performance and reliability criteria.

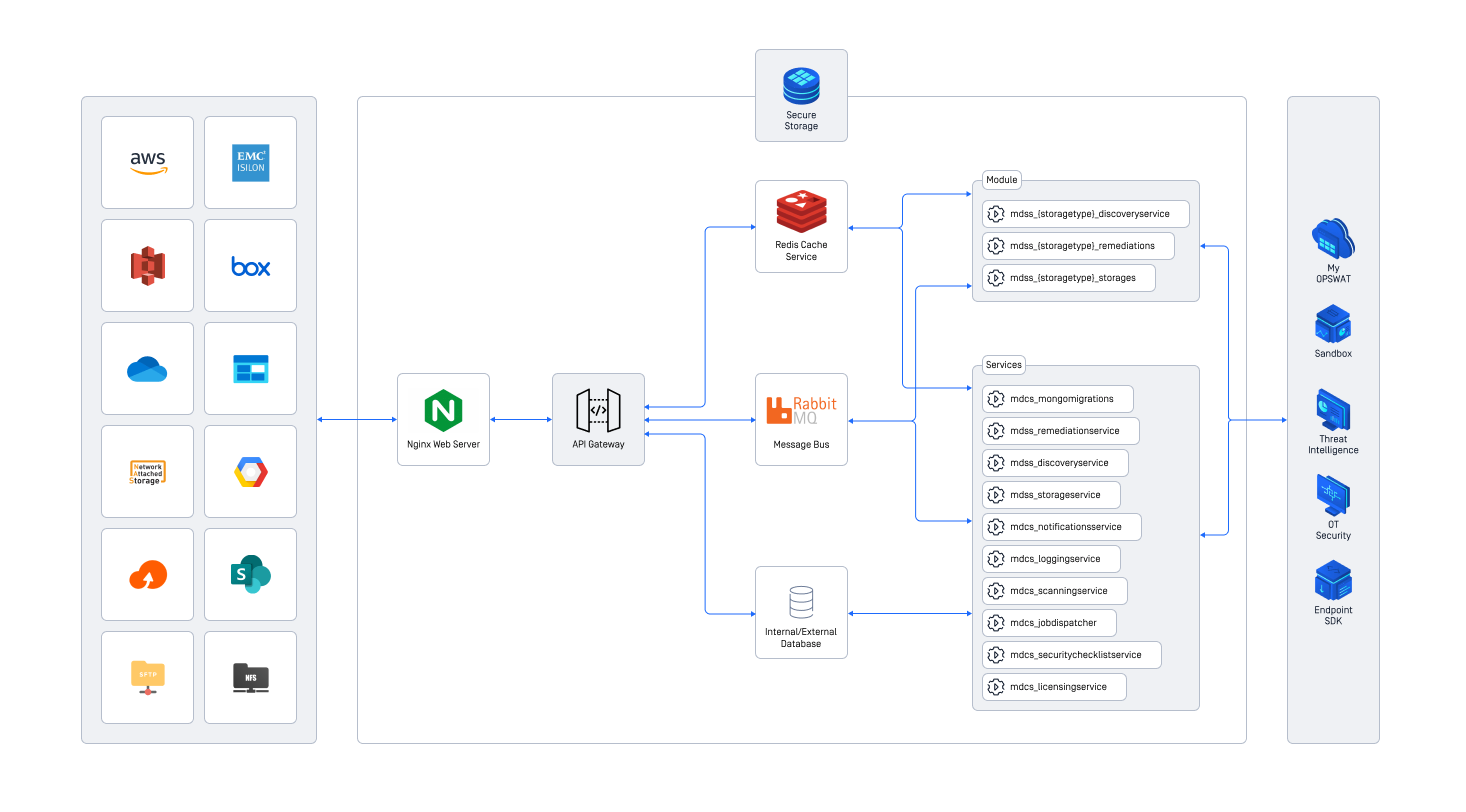

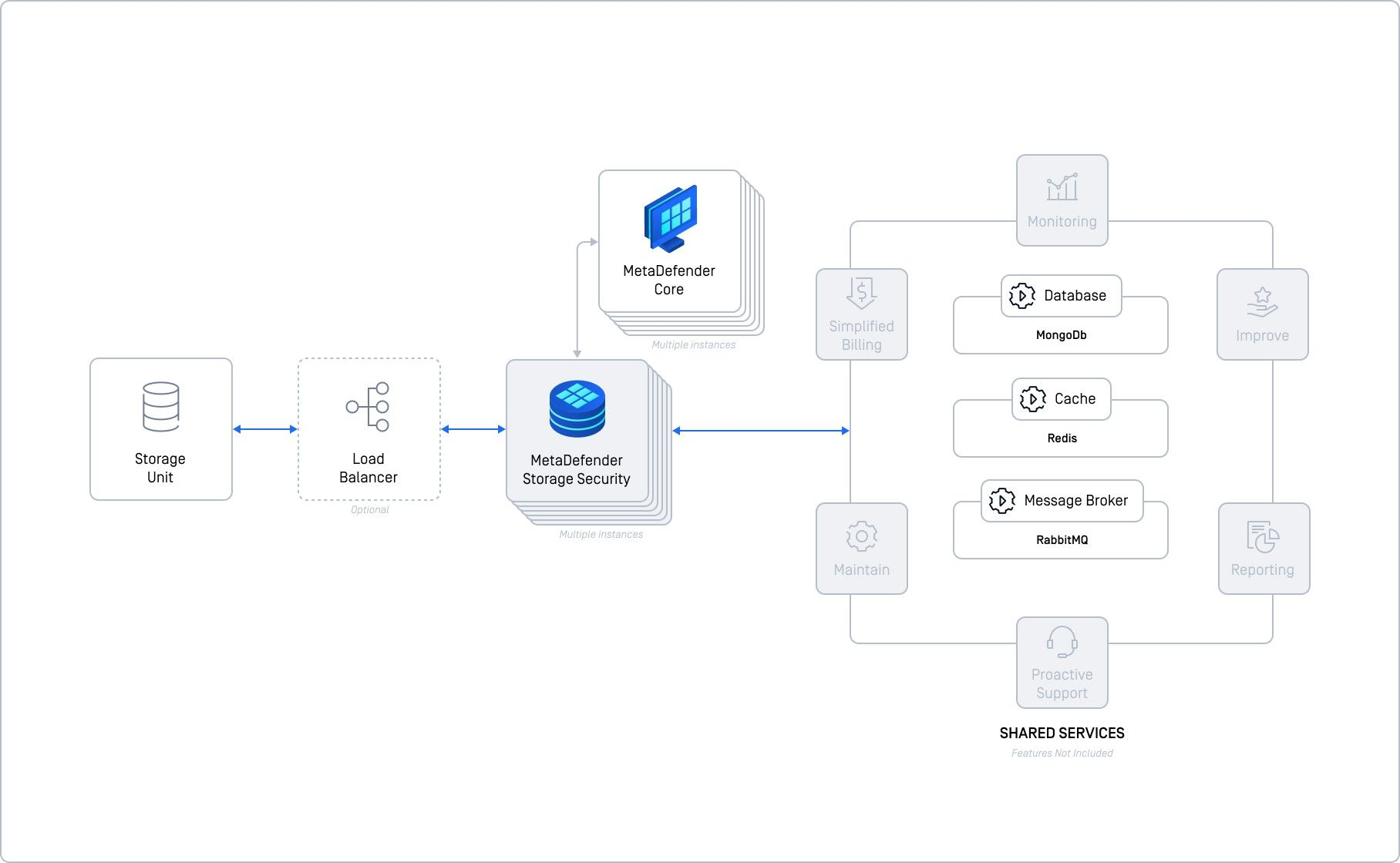

High level architecture of MetaDefender Storage Security

A note on shared services

The diagram illustrates the internal components of the Storage Security architecture and their interactions. Notably, it features several shared services such as the database (MongoDB), the Message Broker (RabbitMQ), and the Cache (Redis). These shared services are crucial for effectively scaling the architecture due to their significant impact on overall system performance. Below, we explore various deployment strategies that enhance throughput by utilizing different methods for these services. It's essential to recognize that these shared components form a stateful architecture. Therefore, any horizontal scaling must ensure that the data, or state, within these services remains accessible across all other services, even as they replicate in a stateless manner.
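The distinction between stateful shared services and stateless replicas can be illustrated with a toy sketch. Everything in it is hypothetical: `SharedStore` is an in-memory stand-in for a shared service such as the database or cache, and `Worker` stands in for a replicated service. It is not how MDSS is implemented, only an illustration of why the state must live outside the replicas.

```python
class SharedStore:
    """In-memory stand-in for a shared, stateful service (hypothetical)."""

    def __init__(self):
        self._scans = {}

    def save(self, file_id, status):
        self._scans[file_id] = status

    def load(self, file_id):
        return self._scans.get(file_id)


class Worker:
    """A replica that keeps no scan state of its own (hypothetical)."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def start_scan(self, file_id):
        # The state is written to the shared store, not kept in the replica.
        self.store.save(file_id, "scanning")

    def finish_scan(self, file_id):
        if self.store.load(file_id) != "scanning":
            raise ValueError(f"{file_id} is not being scanned")
        self.store.save(file_id, "clean")


store = SharedStore()
worker_a = Worker("a", store)
worker_b = Worker("b", store)

worker_a.start_scan("report.pdf")
worker_b.finish_scan("report.pdf")  # a different replica picks the work up
print(store.load("report.pdf"))     # prints "clean"
```

Because both workers read and write the same store, either one can continue work the other started. That is the property horizontal scaling depends on.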

Additionally, this guide shows how specific technologies can tailor an implementation to a particular environment. For instance, when deploying Storage Security on AWS, we recommend Amazon MQ as a substitute for the standard RabbitMQ message broker. Similar recommendations apply to the database and cache.

Deployment Options

The table below provides a summary of the various options available for deployment.

| | Basic deployment (single instance) | Basic deployment with self-hosted shared services | Basic deployment with managed services | Advanced deployment with self-hosted shared services | Advanced deployment with managed services | Cloud deployment with Kubernetes (k8s) |
|---|---|---|---|---|---|---|
| Scale | Small | Small | Small | Medium | Medium | Large |
| Best suited for | Suitable for small and predictable workloads | Suitable for small workloads, offers some flexibility | Suitable for small workloads, when high availability (HA) is desired | Great at handling workload, but difficult to manage | Perfect for medium to large workloads, but without auto-scaling | Ideal for large workloads, handles daily peaks, cost optimized |
| Scalability | | | | | | |
| High Availability | | | | | | |
| Auto-Scale Ready | | | | | | |
| Files / hour (Objects / hour) | 5,000 (125,000) | 20,000 (500,000) | 50,000 (1,250,000) | 100,000 (2,500,000) | 100,000 (2,500,000) | 100,000 or more |
| Recommended MD Core # | 2-4 | 4 or more | 4 or more | 4 or more | 4 or more | Tailored for each customer |
| Recommended MDSS # | 1 | 1 | 1 | 2 or more | 2 or more | Tailored for each customer |
| Infrastructure complexity | | | | | | |

The data presented in the table was derived from a dataset comprising files from various representative categories, including Adobe (PDF), executables (EXE, MSI), images (BMP, JPG, PNG), media (MP3, MP4), Office documents (DOC/X, PPT/X, XLS/X), as well as text and archives. This dataset consists of 5,000 compressed files and 5,260 uncompressed files, totaling 7,728.5 MB with an average file size of 1.55 MB. To simulate larger scenarios, this dataset was replicated to create datasets of 50,000 and over 100,000 files. These results are intended as guidelines rather than precise performance guarantees due to the multitude of variables that can impact performance, such as file types, network configurations, and hardware specifications. For critical throughput requirements, OPSWAT recommends conducting site-specific benchmarks prior to deploying a production solution.
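As a rough planning aid, the throughput figures from the table can be turned into a small lookup. The sketch below is only an illustration: the function names are hypothetical, the ceilings are copied from the table, and the data-rate estimate assumes your files resemble the 1.55 MB average of the test dataset, which may not hold for your workload.

```python
# Throughput ceilings in files per hour, copied from the table above.
OPTIONS = [
    ("Basic deployment (single instance)", 5_000),
    ("Basic deployment with self-hosted shared services", 20_000),
    ("Basic deployment with managed services", 50_000),
    ("Advanced deployment with self-hosted shared services", 100_000),
    ("Advanced deployment with managed services", 100_000),
]
KUBERNETES = "Cloud deployment with Kubernetes (k8s)"  # "100,000 or more"
AVG_FILE_MB = 1.55  # average file size of the test dataset


def candidate_options(files_per_hour):
    """Return the deployment options whose tested ceiling covers the workload."""
    names = [name for name, ceiling in OPTIONS if files_per_hour <= ceiling]
    names.append(KUBERNETES)  # no upper bound is listed for this option
    return names


def data_rate_mb_per_s(files_per_hour, avg_file_mb=AVG_FILE_MB):
    """Estimate the sustained data rate implied by a files-per-hour target."""
    return files_per_hour * avg_file_mb / 3600


print(candidate_options(30_000))
print(round(data_rate_mb_per_s(100_000), 1))  # roughly 43.1 MB/s
```

For example, a workload of 30,000 files per hour rules out the first two options. At 100,000 files per hour the dataset's average file size implies roughly 43 MB/s of sustained traffic, which is worth checking against your network and storage before benchmarking.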

Basic deployment (single instance)

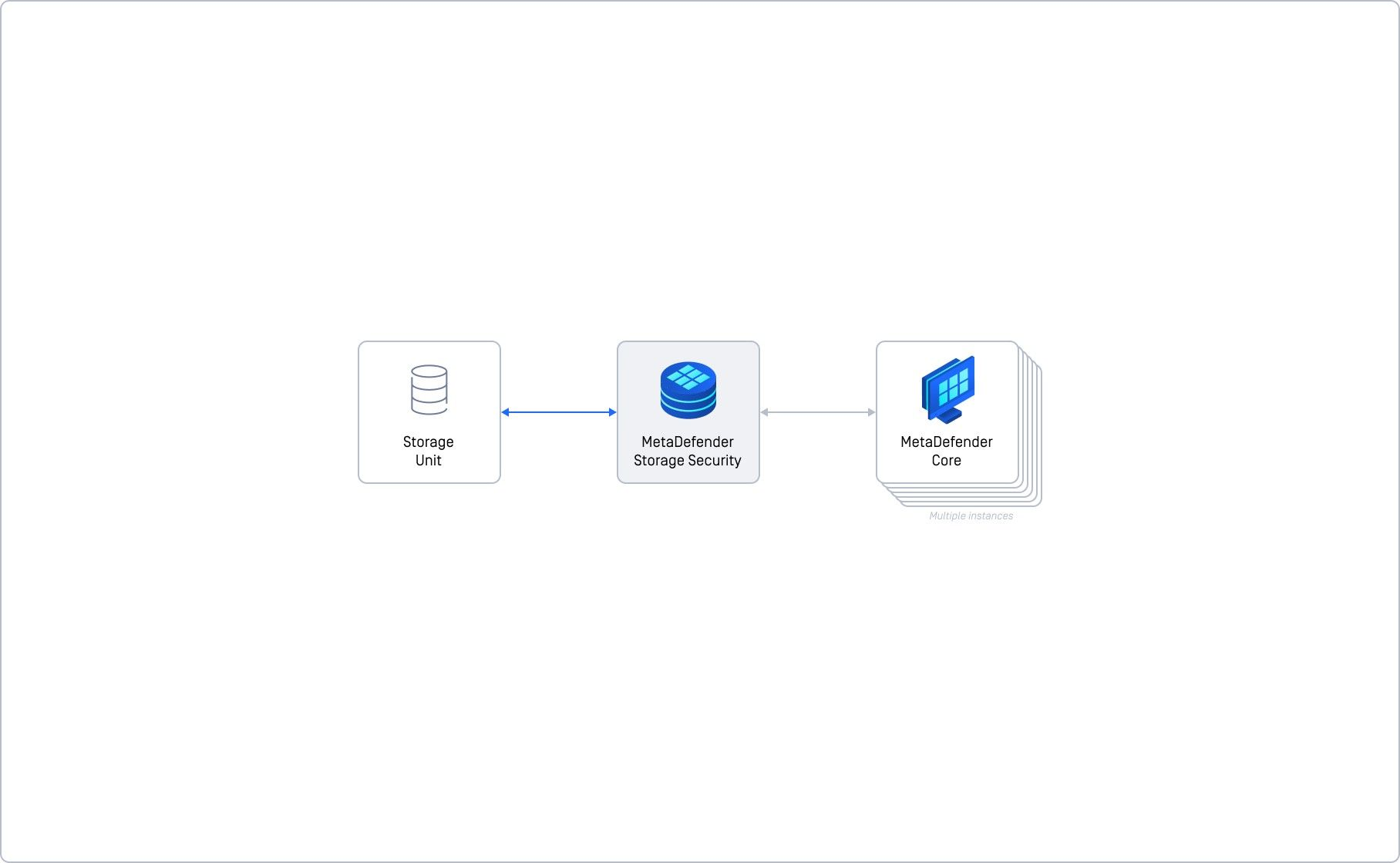

This method represents the simplest and quickest approach to deploying MetaDefender Storage Security. It is ideally suited for handling small workloads. Please note that actual performance may vary based on file sizes and types. Although this setup cannot be scaled horizontally to enhance performance or availability, it allows for reconfiguration or upgrades as part of a subsequent deployment strategy.

Given that this deployment uses a single instance with all components integrated, it offers the advantage of minimal complexity. However, it lacks scalability and redundancy, which are crucial for maintaining continuous operations. Consequently, this method is not advisable for use in production environments where high availability and scalability are essential.

Basic deployment with self-hosted shared services

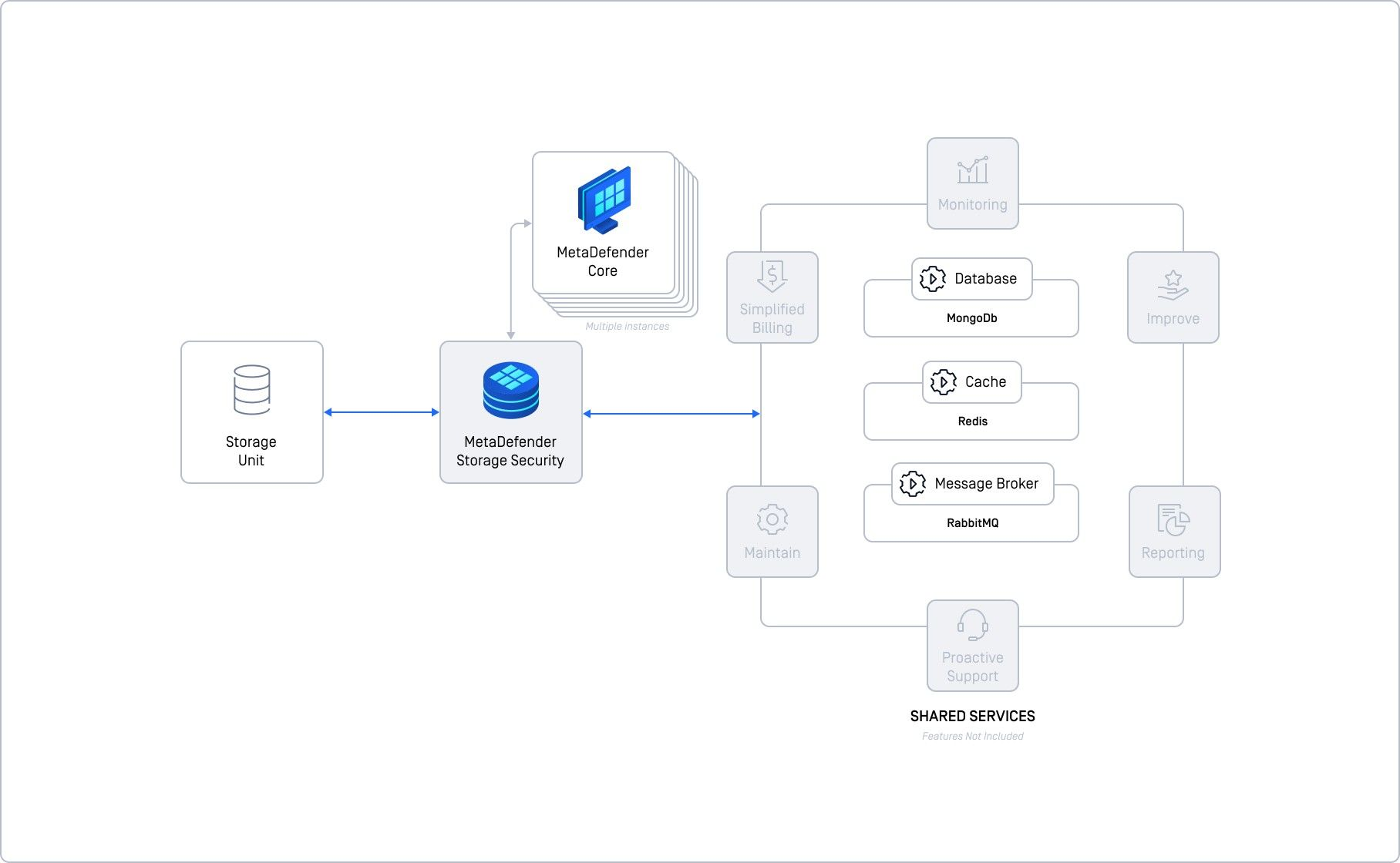

This deployment method represents a step up in complexity compared to the simplest deployment, as it involves setting up separate instances for essential shared services. These services include a database service (MongoDB), a cache service (Redis), and a message broker (RabbitMQ). While this setup demands more effort in terms of separate deployments and configurations, it significantly enhances reliability and scalability for the shared components of MetaDefender Storage Security.

Each of these shared services must be manually deployed, configured, monitored, and maintained by your team prior to integration with MetaDefender Storage Security. For instance, implementing replication at the database level is crucial to ensure high availability and prevent a single point of failure.

For optimal performance and reliability, we recommend deploying each shared service on its own machine or node, as an individual virtual machine. It is technically feasible to deploy all services on a single VM; however, this arrangement compromises scalability and reliability and exposes the system to single points of failure.

With the shared services appropriately offloaded and supported, this deployment configuration can efficiently handle up to 20,000 files per hour, provided that sufficient resources are allocated to the database, cache, and message broker services.
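Before connecting MDSS to self-hosted services, it can help to confirm that each one is reachable from the MDSS host. The following is a minimal sketch using only the Python standard library. The hostnames are hypothetical placeholders, and the ports are the usual defaults for MongoDB (27017), Redis (6379), and RabbitMQ (5672), which your deployment may have changed.

```python
import socket

# Hypothetical hostnames; replace with your own. Ports are the common defaults.
SHARED_SERVICES = {
    "MongoDB": ("mongodb.internal.example", 27017),
    "Redis": ("redis.internal.example", 6379),
    "RabbitMQ": ("rabbitmq.internal.example", 5672),
}


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False


def check_services(services=SHARED_SERVICES):
    """Map each service name to whether its port accepted a connection."""
    return {
        name: is_reachable(host, port)
        for name, (host, port) in services.items()
    }
```

Calling `check_services()` returns a dictionary such as `{"MongoDB": True, ...}`. A successful TCP connection only shows that the port is open; it does not verify credentials, TLS settings, or replication health.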

Once all services are deployed and ready to be used, please follow Self-hosted shared services to connect MDSS to them.

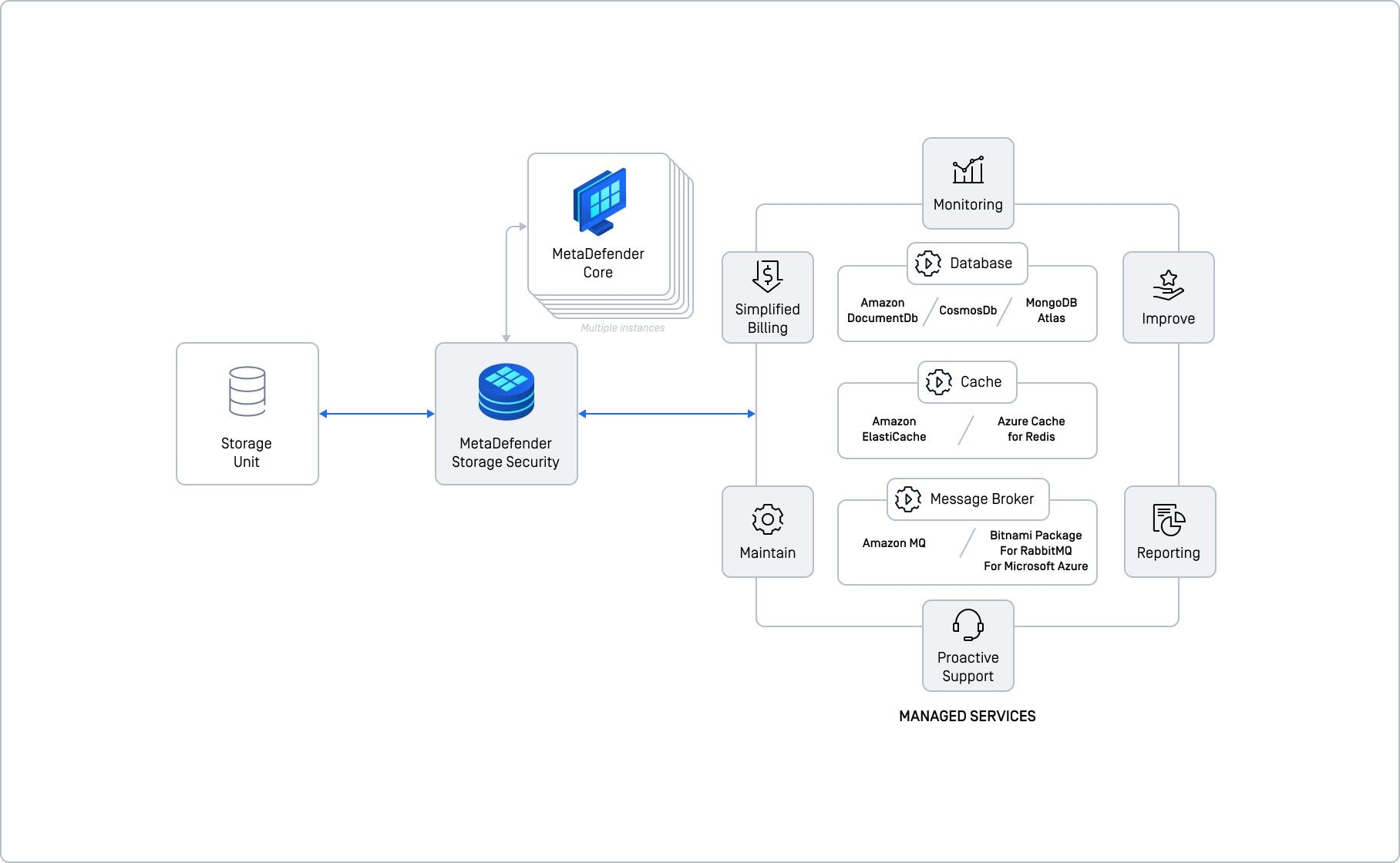

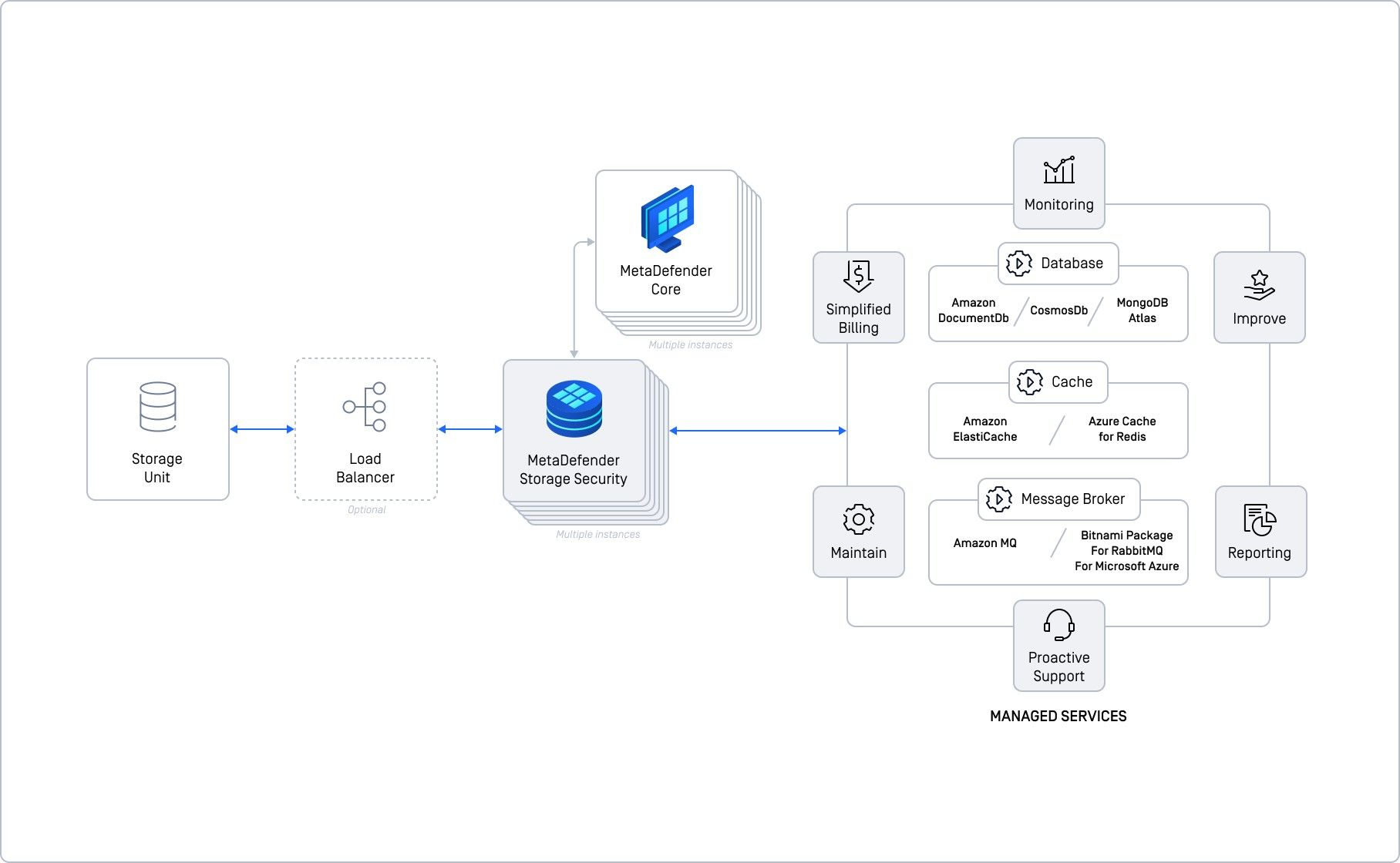

Basic deployment with managed services

This deployment strategy builds on the basic model by utilizing managed services for our shared components, offering a seamless integration for those already leveraging cloud solutions. For example, if you are an Amazon customer planning to deploy MetaDefender Storage Security on Amazon EC2, it is advantageous to use Amazon DocumentDB as your database service. In line with this approach, Amazon ElastiCache would serve as your Cache, and Amazon MQ as your Message Broker. This not only simplifies the overall workload but also provides benefits such as enhanced monitoring, reporting, maintenance, and proactive support—including billing and ongoing improvements—from your cloud provider.

Similarly, if you use Azure or Google Cloud, this model can be adapted to utilize their corresponding managed services. For more information on integrating with various cloud providers, please follow this page.

In this deployment model, redundancy and reliability are managed by the cloud provider, since the shared components are employed as managed services. This setup entails costs, but it alleviates your team from the complexities of direct management. Your primary focus will be on deploying MetaDefender Storage Security and scaling up with additional MetaDefender Core instances as needed. Based on our testing, this deployment can efficiently scale up to handle 50,000 files per hour with just one Storage Security instance and four Core instances.

Advanced deployment with self-hosted shared services

This deployment model builds on the basic deployments described above. It requires at least two MetaDefender Storage Security instances and the same shared services, all deployed in a self-hosted manner. This approach increases complexity, as it involves setting up separate instances for the essential shared services: a database (MongoDB), a cache (Redis), and a message broker (RabbitMQ). The configuration demands considerable deployment and configuration effort but offers substantial improvements in reliability and scalability for the shared components of Storage Security.

Each shared service must be manually deployed, configured, monitored, and maintained by your team before integration with MDSS. Implementing measures such as database replication is critical to ensure high availability and eliminate single points of failure.

For achieving optimal performance and reliability, we recommend deploying each shared service on separate machines or nodes as individual virtual machines. With the shared services effectively offloaded and scaled, this deployment can proficiently manage up to 100,000 files per hour, assuming adequate resources are dedicated to the database, cache, and message broker services.

In our internal testing, deploying 2 instances of Storage Security connected to 4 instances of MetaDefender Core yielded substantial performance results.

Please be aware that this deployment model, while highly effective, is also the most complex to monitor and maintain due to the increased number of components deployed separately. Effective and robust monitoring systems are essential to manage this complexity and ensure continuous operation.

Once all services are deployed and ready to be used, please follow Production considerations to connect MDSS to them.

More resources

- Advanced deployments | System Requirements

- Self-hosted shared services

- Installation

- Production considerations for Unix-based deployments

- Production considerations for Windows-based deployments

Advanced deployment with managed services

This advanced deployment model extends the previous strategies by requiring at least two Storage Security instances, while employing managed services for the shared components. This approach offers seamless integration for those already using cloud solutions. For instance, if you are deploying Storage Security on Amazon EC2, using Amazon DocumentDB as your database service, Amazon ElastiCache for caching, and Amazon MQ as your message broker is highly advantageous. This configuration simplifies the overall workload and brings enhanced monitoring, reporting, maintenance, and proactive support, including billing and continuous improvements from your cloud provider.

Similarly, if you use Azure or Google Cloud, this model can be adapted to utilize their corresponding managed services. For more information on integrating with various cloud providers, please follow this page.

In this model, the cloud provider manages redundancy and reliability, as the shared components are utilized as managed services. Although this involves additional costs, it significantly reduces the complexities associated with direct management of these services. Your primary focus will center on deploying Storage Security across multiple instances to ensure scalability and augmenting your setup with additional MetaDefender Core instances as necessary.

Our testing indicates that this deployment configuration can effectively scale up to handle 100,000 files per hour, with at least two MetaDefender Storage Security instances and four MetaDefender Core instances.

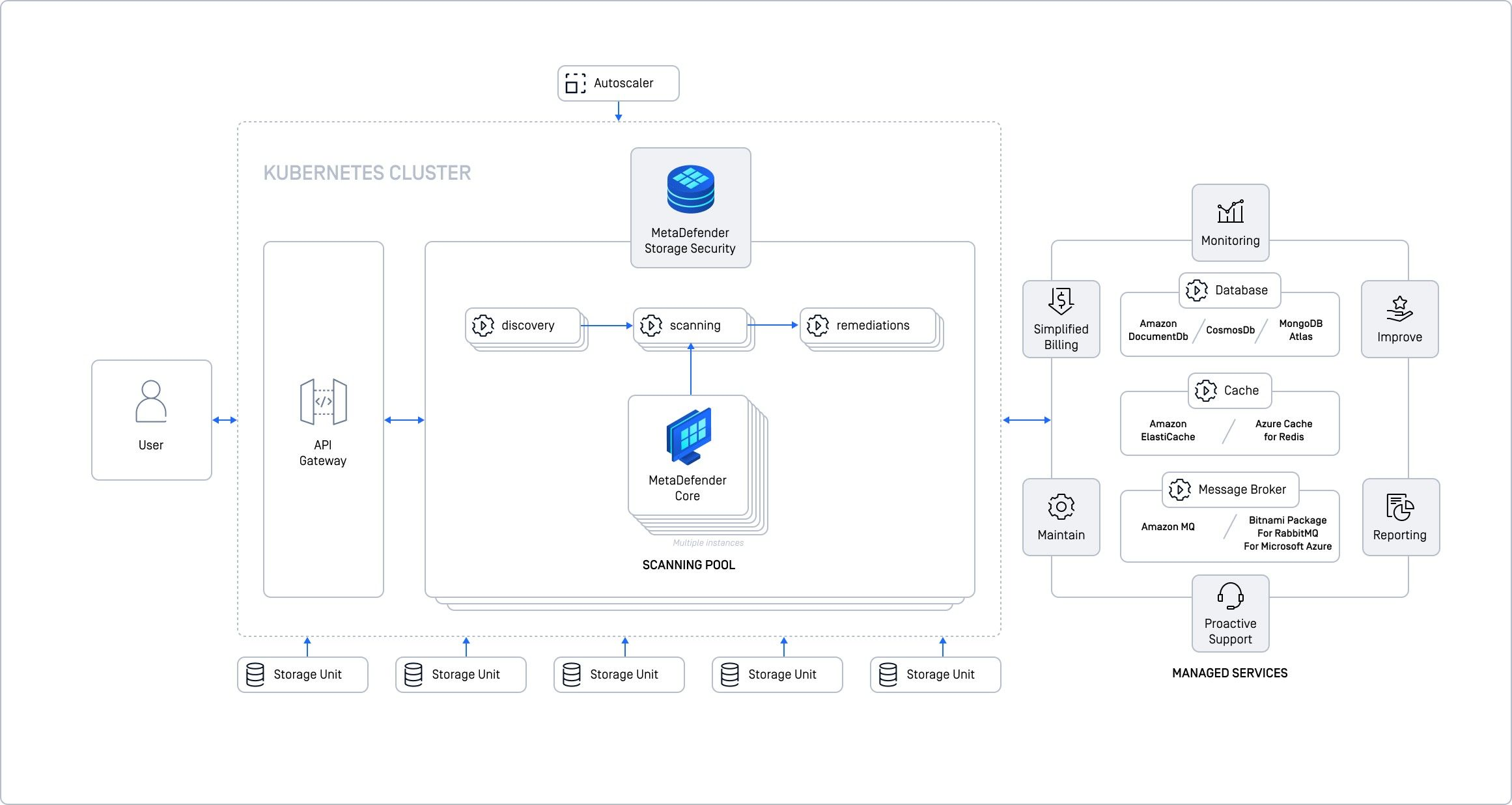

Cloud deployment with Kubernetes (k8s)

This deployment type is our recommended option when scalability, high availability, or handling a high volume of files is a priority. Kubernetes offers the flexibility to scale from a small setup, designed to manage a moderate volume of files with minimal resources, to a large-scale deployment ensuring high availability across multiple nodes without interruptions. Moreover, scaling can be dynamically adjusted based on workload or other metrics, and each component can be individually scaled to optimize resource allocation tailored to specific needs.
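To make metric-based scaling concrete, the sketch below reproduces the basic rule the Kubernetes Horizontal Pod Autoscaler documents for choosing a replica count: the ceiling of the current replica count multiplied by the ratio of the observed metric to its target. The function name, metric values, and replica bounds are hypothetical, and the real autoscaler also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply ceil(current * observed / target), clamped to the given bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Two replicas at 90% average CPU with a 60% target scale out to three.
print(desired_replicas(2, 90, 60))  # prints 3
# Four replicas at 30% with the same target scale in to two.
print(desired_replicas(4, 30, 60))  # prints 2
```

Which metric drives scaling for each component (CPU, queue depth, or something else) is a deployment decision, and the numbers above are illustrative only.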

For this setup, we advise using managed services for the shared components such as the database, cache, and message broker. For instance, if you plan to deploy MetaDefender Storage Security on Amazon EKS, using Amazon DocumentDB as your database service, Amazon ElastiCache as your cache, and Amazon MQ as your message broker will simplify the workload. It also brings additional benefits such as enhanced monitoring, reporting, and maintenance, along with proactive support, billing, and continuous improvements from your cloud provider.

Users of Azure or Google Cloud can adapt this model to employ their respective managed services with Azure Kubernetes Service (AKS) or Google Kubernetes Engine (GKE). For detailed guidance on integrating with various cloud providers, please follow:

Before deploying Storage Security, a Kubernetes cluster must be provisioned and prepared. This preparation includes setting up load balancing and autoscaling, ensuring persistent storage if required, and establishing connectivity to external services. Once these prerequisites are addressed, you can deploy Storage Security using our Helm chart, available on GitHub in the OPSWAT/metadefender-k8s repository (Run MetaDefender in Kubernetes using Terraform and Helm Chart). For more detailed instructions on how to deploy and configure the product in a generic Kubernetes cluster, please refer to Kubernetes deployment.