By default, Sandbox log files are collected in the following folder from multiple system components:

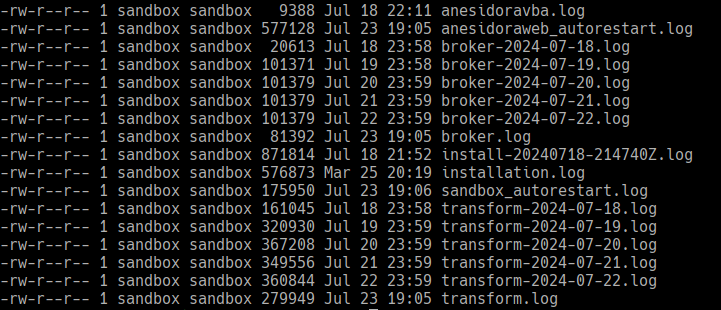

/home/sandbox/sandbox/logsA similar list of log files should be present in that folder:

Note that log rotation is also used, so multiple log files will be present for a given component (most notably broker and transform).

You can open any of these files in a text editor of your choice.

To view the colorized transform logs in real time, use the sblog command in your shell. This should work for all users, but a sudo password might be needed if a given user does not have read access to /home/sandbox/sandbox/logs/transform.log:

sblog

To view the colorized broker logs in real time, use the sblog command with the -b argument:

sblog -b

The implementation of sblog is the /usr/local/sbin/sblog bash script.

This script calls multitail on the given logfile using the sandbox color scheme. You can run the same command on any logfile that you want to view in real time, with LOGFILE set to the path of that file:

multitail -D -n 1000 -cS sandbox -i "${LOGFILE}"

Logs generated by different Sandbox components

Installation logs

- logfile name: install-yyyyMMdd-HHMMssZ.log

- logfile location: <sandbox_install_folder>/logs/

- encoding: UTF-8

- logfile format: text

- content: log messages collected when running install.sh

- log message format: no strict format; the output of various tools is logged

- example log message:

Successfully upgraded MetaDefender Sandbox to: v2.0.0-Standalone

Transform logs

logfile names: transform.log and anesidoravba.log

logfile location: <sandbox_install_folder>/logs/

logging framework: Log4j

encoding: UTF-8

logfile format: text (optional JSON)

content: generic log messages

supported log levels: FATAL, ERROR, WARN, INFO

default log level: INFO

log rotation:

- filename format: transform-yyyy-MM-dd.log

- rotation period: daily

- maximum age: 30 days

log message format: "[%X{flow_id, uid}] %t %d %p %L [%c{1}] - %m%n"

- flow_id: primary scan identifier

- uid: component level scan identifier

- [%c{1}]: obfuscated class name
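Because the layout is fixed, the level of each record can be extracted with standard command-line tools. The following is a minimal shell sketch, assuming the text format (not the optional JSON) and the pattern above unchanged; `level_counts` is a hypothetical helper, not part of Sandbox. In Log4j's PatternLayout, %t is the thread name, %d the timestamp, %p the level and %L the source line number, so the sketch looks for a level name followed by a line number and the bracketed logger name.

```shell
# Sketch (hypothetical helper): count transform or broker records per log level.
# Assumes the documented text layout "[%X{flow_id, uid}] %t %d %p %L [%c{1}] - %m%n":
# the level (%p) is followed by the source line number (%L), the bracketed
# logger name (%c{1}) and " - ". Continuation lines such as stack traces
# carry no such prefix and are not counted.
level_counts() {
  # -o: print only the matched part, -h: no file name prefix, -E: extended regex
  grep -ohE ' (FATAL|ERROR|WARN|INFO) [^ ]+ \[[^]]*] - ' "$@" |
    awk '{ n[$1]++ } END { for (lvl in n) print lvl, n[lvl] }' |
    LC_ALL=C sort
}
```

For example, `level_counts /home/sandbox/sandbox/logs/transform*.log` would summarize the current and rotated transform logs together. Matching on the level, line number and logger sequence rather than on a fixed column keeps the sketch independent of how long the MDC block or the thread name is.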

example log message:

[{flow_id=5557f50da8ba600952e99d8e, uid=9a25d15b-193a-4f6a-8151-fbc92ed9df50}] pool-5-thread-1 2024-03-18 09:08:15,411 INFO 61 [T] - Starting transform task

Broker logs

logfile name: broker.log

logfile location: <sandbox_install_folder>/logs/

logging framework: Log4j

encoding: UTF-8

logfile format: text (optional JSON)

content: generic log messages

supported log levels: FATAL, ERROR, WARN, INFO

default log level: INFO

log rotation:

- filename format: broker-yyyy-MM-dd.log

- rotation period: daily

- maximum age: 30 days

log message format: "[%X{flow_id, uid}] %t %d %p %L [%c{1}] - %m%n"

- flow_id: primary scan identifier

- uid: component level scan identifier

- [%c{1}]: obfuscated class name
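Since flow_id identifies the scan as a whole while uid is specific to each component, searching for the flow_id is a straightforward way to follow one scan through both the transform and broker logs. A minimal shell sketch, assuming the default logs folder holds both current and rotated files named as listed for each component; `trace_flow` is a hypothetical helper name:

```shell
# Sketch (hypothetical helper): print every transform and broker record of one scan.
# Assumptions: current and rotated files share one folder and follow the
# documented names (transform.log, transform-yyyy-MM-dd.log, broker.log,
# broker-yyyy-MM-dd.log). Output is grouped per file, not merged by time.
trace_flow() {
  local flow_id="$1" logs_dir="$2" f
  for f in "$logs_dir"/transform*.log "$logs_dir"/broker*.log; do
    [ -e "$f" ] || continue           # the glob matched nothing
    # -F: literal match, -H: prefix each hit with its file name.
    # The trailing "," or "}" stops a short ID from matching a longer one.
    grep -HF -e "flow_id=${flow_id}," -e "flow_id=${flow_id}}" "$f" || true
  done
}
```

For example, `trace_flow 5557f50da8ba600952e99d8e /home/sandbox/sandbox/logs` would print the matching records of that scan, each prefixed with the file it came from.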

example log message:

[{flow_id=5557f50da8ba600952e99d8e, uid=31c41e37-92f1-490e-ac3e-9f6fdcf0c6e5}] pool-2-thread-1 2024-03-18 09:09:05,828 INFO 254 [B] - Submitting (1662 bytes) to application server

Autorestart logs

Docker container healthcheck and autorestart log entries.

logfile names: sandbox_autorestart.log and anesidoraweb_autorestart.log

logfile location: <sandbox_install_folder>/logs

encoding: UTF-8

logfile format: text

content: autorestart log messages

log message format: "yyyy-MM-dd HH:mm:ss - %message"

- message: arbitrary message
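Because each entry starts with a fixed-width timestamp, entries can be filtered by time with a plain string comparison. The shell sketch below prints entries at or after a given time while skipping the routine "All containers are healthy." message; the helper name `autorestart_events` is hypothetical, and treating every other message as worth reviewing is an assumption, since the message text is otherwise arbitrary.

```shell
# Sketch (hypothetical helper): list autorestart entries at or after a given
# time, skipping the routine "All containers are healthy." message.
# Assumption: every entry starts with a 19-character "yyyy-MM-dd HH:mm:ss"
# timestamp, so byte-wise string comparison orders entries by time.
autorestart_events() {
  local since="$1"   # e.g. "2024-03-06 00:00:00"
  shift
  LC_ALL=C awk -v since="$since" '
    substr($0, 1, 19) >= since && index($0, " - All containers are healthy.") == 0
  ' "$@"
}
```

For example: `autorestart_events "2024-03-06 00:00:00" <sandbox_install_folder>/logs/sandbox_autorestart.log`.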

example log message:

2024-03-06 14:00:01 - All containers are healthy.

Docker container logs

Docker creates a log file for each container.

logfile location: /var/lib/docker/containers/

- this folder contains one subfolder per container, named by container ID

- each subfolder contains a file named {container-ID}-json.log

encoding: UTF-8

logfile format: JSON

content: generic log messages

default log level: INFO

log rotation:

- on container restart

- after the file reaches 1 GB

log object format:

- log: log message

- stream: output stream the message was written to (stderr by default)

- time: time when log message was created

log message format: "%(asctime)s %(levelname)s: %(traceable_id)s%(message)s"

- asctime: log message time

- levelname: log level (INFO, WARNING, ERROR)

- traceable_id: optional token to help trace log records, like [health:arq:scan_max_priority:long_run]

- message: log message itself
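Because the level name is embedded in the "log" string, a rough per-container overview can be built without a JSON parser. The shell sketch below counts WARNING and ERROR records in each {container-ID}-json.log file; the helper name `summarize_container_logs` is hypothetical, and the plain substring match can overcount if a message itself contains " ERROR: ".

```shell
# Sketch (hypothetical helper): per-container count of WARNING and ERROR records.
# Assumptions: the layout <root>/<container-ID>/<container-ID>-json.log described
# above, and level names appearing as " WARNING: " / " ERROR: " inside the "log"
# string. This is a substring match, not a JSON parser.
summarize_container_logs() {
  local root="${1:-/var/lib/docker/containers}" dir id file warn err
  for dir in "$root"/*/; do
    id=$(basename "$dir")
    file="$root/$id/$id-json.log"
    [ -f "$file" ] || continue        # skip folders without a json-file log
    # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps going
    warn=$(grep -c ' WARNING: ' "$file" || true)
    err=$(grep -c ' ERROR: ' "$file" || true)
    # Docker CLI commands accept the first 12 characters of a container ID
    printf '%.12s warnings=%s errors=%s\n' "$id" "$warn" "$err"
  done
}
```

Reading /var/lib/docker/containers/ usually requires root privileges. To read the full output of a single container, `docker logs <container-ID>` prints the same records without the JSON wrapper.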

example log object:

{ "log": "2024-03-18 12:12:28,066 INFO: [health:arq:scan_max_priority:long_run] Started long healthcheck for arq:scan_max_priority queue. Executes each 11 seconds", "stream": "stderr", "time":"2024-03-18T12:12:28.067486418Z"}

Nginx logs

Standard access and error logs generated by nginx. For details, see https://docs.nginx.com/nginx/admin-guide/monitoring/logging/

logfile names: access.log and error.log

logfile location:

- /srv/backend/nginx/logs/access.log

- /srv/backend/nginx/logs/error.log

encoding: UTF-8

logfile format: text

content: standard access log and error log messages

example access log messages:

`172.20.0.1 - - [06/Aug/2024:19:36:39 +0000] "GET /api/system/info HTTP/1.1" 200 174 "-" "Python/3.10 aiohttp/3.8.4" "-"`

`- - [06/Aug/2024:19:36:39 +0000] "GET https://<SANDBOX-HOST>/api/system/info HTTP/1.1" 200 174 "-" "Python/3.10 aiohttp/3.8.4" 0.010`

`172.71.167.198 - - [06/Aug/2024:19:37:01 +0000] "GET / HTTP/2.0" 200 1299 "https://<SANDBOX-HOST>/" "Mozilla/5.0+" "<IP>"`

example error log message:

`2024/02/19 14:37:16 [error] 36#36: *22 upstream timed out (110: Connection timed out) while reading response header from upstream, client: <IP>, server: , request: "POST /api/system/license/activate/online HTTP/2.0", upstream: "http://<HOST>:<PORT>/api/system/license/activate/online", host: "localhost", referrer: "https://localhost/admin/settings/integration"`
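Splitting an access-log line on double quotes isolates the quoted request line, so the status code is the first word after it. This works for both access-log layouts shown above, even though their leading fields differ. A minimal shell sketch follows; the helper name `status_counts` is hypothetical, and a custom log_format that places another quoted field before the request would break the assumption.

```shell
# Sketch (hypothetical helper): count nginx access-log entries per HTTP status.
# With '"' as the field separator, $2 is the quoted request line and $3 holds
# " <status> <bytes> ", so the status is the first word of $3.
# Assumption: the request line is the first quoted field, as in the examples.
status_counts() {
  awk -F'"' 'NF >= 3 {
      split($3, w, " ")
      if (w[1] ~ /^[0-9][0-9][0-9]$/) n[w[1]]++
    }
    END { for (s in n) print s, n[s] }' "$@" | LC_ALL=C sort
}
```

For example, `status_counts /srv/backend/nginx/logs/access.log` prints one line per status code followed by its count. Lines without a three-digit status in that position, such as error.log entries, are skipped.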