Shared DB - Windows

These results should be viewed as guidelines rather than performance guarantees, since many variables affect performance (file set, network configuration, hardware characteristics, etc.). If throughput is important to your implementation, OPSWAT recommends site-specific benchmarking before deploying a production solution.

Environment

The tests were run in an AWS environment with the following specifications:

MetaDefender Core

| Server | OS | AWS instance type | vCPU | Memory (GB) | Network bandwidth (Gbps) | Disk type | Benchmark |
|---|---|---|---|---|---|---|---|
| MetaDefender Core #1 | Windows Server 2022 | c5.4xlarge | 16 | 32 | Up to 10 | SSD | Amazon EC2 c5.4xlarge - Geekbench |
| MetaDefender Core #2 | Windows Server 2022 | c5.4xlarge | 16 | 32 | Up to 10 | SSD | Amazon EC2 c5.4xlarge - Geekbench |

RDS

| OS | AWS instance type | vCPU | Memory (GB) | Network bandwidth (Gbps) | Disk type |
|---|---|---|---|---|---|
| Windows Server 2022 | db.m7i.4xlarge | 16 | 64 | Up to 10 | SSD |

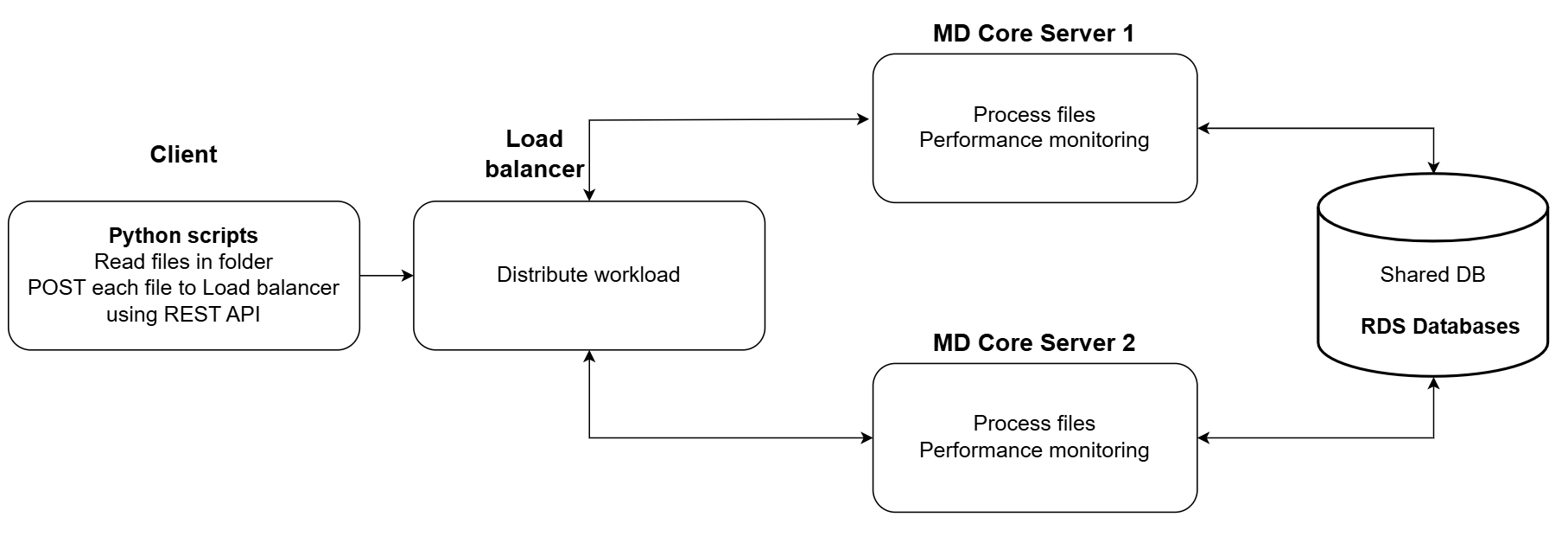

Deployment Model

An AWS Load Balancer distributes the files sent from the client tool across two MetaDefender Core servers using the round-robin algorithm. Both servers share a single database (the RDS instance above). With round robin, each MetaDefender Core server should receive the same number of requests.
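Round robin simply hands out requests in a fixed rotation. The sketch below only illustrates that rotation on a list of file names; it is not how the AWS Load Balancer is implemented, and the server names `core-1` and `core-2` are placeholders.

```python
from itertools import cycle


def round_robin(files, servers):
    """Assign files to servers in a fixed rotation: 1st file to the 1st server,
    2nd file to the 2nd server, and so on, wrapping around at the end."""
    plan = {server: [] for server in servers}
    # zip() stops when the file list is exhausted; cycle() repeats the servers.
    for file_name, server in zip(files, cycle(servers)):
        plan[server].append(file_name)
    return plan


if __name__ == "__main__":
    files = [f"file{i}.bin" for i in range(5)]
    for server, assigned in round_robin(files, ["core-1", "core-2"]).items():
        print(server, assigned)
```

The request counts per server differ by at most one, but nothing in the rotation looks at file size, so the bytes (and scan work) per server can still differ.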

Client tool

A simple Python tool collects the files in a designated folder and submits them to the load balancer described above.

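```python
import asyncio
import time

import requests


class ScanClient:
    # The class wrapper and scan_all() are illustrative; the original snippet
    # showed these statements as members of an existing class.
    def __init__(self, load_balancer_url):
        self.load_balancer_url = load_balancer_url
        self.post_wait = 0.0   # total seconds spent in successful POST /file calls
        self.num_post_req = 0  # number of successful POST /file calls

    async def scan_all(self, files_to_scan):
        # files_to_scan: list of file paths to scan
        scan_futures = []
        for file_path in files_to_scan:
            scan_futures.append(asyncio.create_task(self.scan_file(file_path, self.load_balancer_url)))
        return await asyncio.gather(*scan_futures)

    async def scan_file(self, file_path, core_url):
        api_url = f'{core_url}/file'
        with open(file_path, 'rb') as file:
            file_content = file.read()
        headers = {'Content-Type': 'application/octet-stream', 'filename': file_path}
        max_retries = 20  # Maximum number of retry attempts
        retry_delay = 120  # Delay in seconds before retrying
        for attempt in range(max_retries + 1):
            try:
                starttime = time.time()
                # requests is blocking; run it in a worker thread (Python 3.9+)
                # so the other upload tasks are not stalled while it waits.
                response = await asyncio.to_thread(
                    requests.post, api_url, data=file_content, headers=headers)
                endtime = time.time()
            except requests.RequestException:
                return None, None, None
            status_code = response.status_code
            if status_code == 200:
                self.post_wait += endtime - starttime
                self.num_post_req += 1
                response_json = response.json()
                data_id = response_json.get('data_id')
                return status_code, response_json, data_id
            if status_code == 503 and attempt < max_retries:
                # 503 Service Unavailable: wait, then retry the same upload.
                print(f"Received status code {status_code}. Retrying in {retry_delay} seconds...")
                await asyncio.sleep(retry_delay)
            else:
                return None, None, None
        return None, None, None
```

The client tool runs on the following machine:

| OS | AWS instance type | vCPU | Memory (GB) | Network bandwidth (Gbps) | Disk type |
|---|---|---|---|---|---|
| CentOS 7 | c5.4xlarge | 16 | 32 | Up to 10 | SSD |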
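The client code above starts from a ready-made `files_to_scan` list. One way to build that list from the designated folder is sketched below with `pathlib`; the function name `collect_files` and the folder name `samples` are hypothetical, and the actual tool may collect files differently.

```python
from pathlib import Path


def collect_files(folder, recursive=True):
    """Return the paths of all regular files under `folder`, sorted so the
    submission order is repeatable. Subfolders are searched when recursive=True."""
    root = Path(folder)
    candidates = root.rglob("*") if recursive else root.glob("*")
    return sorted(str(path) for path in candidates if path.is_file())


if __name__ == "__main__":
    files_to_scan = collect_files("samples")  # hypothetical folder name
    print(f"{len(files_to_scan)} files to scan")
```

Sorting is a design choice for reproducible runs; it does not change which server a file lands on under round robin unless the order changes.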

Dataset

The following dataset was used for testing:

| File category | File type | Number of files | Total size (MB) | Average file size (MB) |
|---|---|---|---|---|
| Adobe | | 370 | 385 | 1.0 |
| Executable | EXE | 45 | 309.5 | 6.9 |
| | MSI | 15 | 45.75 | 3.1 |
| Image | BMP | 80 | 515 | 6.4 |
| | JPG | 420 | 237.5 | 0.6 |
| | PNG | 345 | 169 | 0.5 |
| Media | MP3 | 135 | 865 | 6.4 |
| | MP4 | 50 | 500 | 10.0 |
| Office | DOCX | 235 | 190 | 0.8 |
| | DOC | 225 | 486 | 2.2 |
| | PPTX | 365 | 860 | 2.4 |
| | PPT | 355 | 1950 | 5.5 |
| | XLSX | 340 | 283.5 | 0.8 |
| | XLS | 335 | 284.5 | 0.8 |
| Text | CSV | 100 | 236 | 2.4 |
| | HTML | 1075 | 76 | 0.1 |
| | TXT | 500 | 210 | 0.4 |
| Archive | ZIP | 10 compressed / 270 extracted | 125.5 compressed / 156.5 extracted | 12.6 compressed / 0.6 extracted |
| Summary (compressed) | | 5000 | 7728.5 | 1.55 |
| Summary (extracted) | | 5260 | 7759.5 | 1.48 |
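The two summary rows follow from the per-type rows: the "extracted" summary swaps the 10 ZIP archives (125.5 MB) for their 270 extracted files (156.5 MB). The sketch below recomputes both rows from the table values; the dictionary layout and the names `DATASET`, `ZIP_EXTRACTED`, and `summarize` are illustrative only.

```python
# (number of files, total size in MB) per row of the dataset table.
# The Adobe row has no file type in the table, so it is keyed by category.
DATASET = {
    "Adobe": (370, 385.0), "EXE": (45, 309.5), "MSI": (15, 45.75),
    "BMP": (80, 515.0), "JPG": (420, 237.5), "PNG": (345, 169.0),
    "MP3": (135, 865.0), "MP4": (50, 500.0),
    "DOCX": (235, 190.0), "DOC": (225, 486.0), "PPTX": (365, 860.0),
    "PPT": (355, 1950.0), "XLSX": (340, 283.5), "XLS": (335, 284.5),
    "CSV": (100, 236.0), "HTML": (1075, 76.0), "TXT": (500, 210.0),
    "ZIP": (10, 125.5),  # compressed archives
}
ZIP_EXTRACTED = (270, 156.5)  # files inside the ZIP archives


def summarize(rows, zip_extracted=None):
    """Return (file count, total MB, average MB per file).
    Pass zip_extracted to count extracted files instead of the archives."""
    rows = dict(rows)
    if zip_extracted is not None:
        rows["ZIP"] = zip_extracted
    count = sum(n for n, _ in rows.values())
    size = sum(mb for _, mb in rows.values())
    return count, size, size / count


if __name__ == "__main__":
    print(summarize(DATASET))                 # compressed view
    print(summarize(DATASET, ZIP_EXTRACTED))  # extracted view
```

The recomputed totals (7728.25 MB and 7759.25 MB) agree with the summary rows to within the 0.25 MB rounding visible in the table.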

Product Information

Product versions:

- MetaDefender Core 5.17.0
- Engines:
  - Metascan 8: Ahnlab, Avira, ClamAV, ESET, Bitdefender, K7, Quick Heal, VirIT Explorer
  - Metascan 12: Metascan 8, Varist, Ikarus, Emsisoft, Tachyon
  - Metascan 16: Metascan 12, NANOAV, Comodo, VirusBlokAda, Zillya!
  - Deep CDR: 7.7.0
  - Proactive DLP: 3.1.0
  - Archive: 7.7.0
  - File type analysis: 7.7.0
  - File-based vulnerability assessment: 4.2.416.0

MetaDefender Core settings

General settings

- Turn off data retention

- Turn off engine update

Archive extraction settings

- Max recursion level: 99999999

- Max number of extracted files: 99999999

- Max total size of extracted files: 99999999

- Timeout: 10 minutes

- Handle archive extraction task as Failed: true

- Extracted partially: true

Metascan AV settings

- Max file size: 99999999

- Scan timeout: 10 minutes

- Per-engine scan timeout: 1 minute

Performance test results

MetaDefender Core with single engine (technology)

Summary metrics:

| Use case | Scan duration (minutes) | Throughput (processed objects/hour) | Avg. processing time (seconds/object) |
|---|---|---|---|
| Metascan 8 | 8.9 | 878,764 | 0.004 |
| Metascan 12 | 15.4 | 507,857 | 0.007 |
| Metascan 16 | 18.1 | 432,099 | 0.008 |
| Deep CDR | 8.8 | 888,750 | 0.004 |
| Proactive DLP | 7.3 | 1,071,370 | 0.003 |
| Vulnerability | 6.1 | 1,282,131 | 0.003 |
| Embedded Sandbox | 158.9 | 49,220 | 0.073 |
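The three columns of the summary table are tied together by simple arithmetic: average processing time is 3600 divided by the hourly throughput, and throughput multiplied by the scan duration (in hours) gives the number of objects processed in the run. The helpers below are illustrative names, not part of MetaDefender Core.

```python
def avg_processing_time(throughput_per_hour):
    """Seconds per object implied by an hourly throughput."""
    return 3600 / throughput_per_hour


def implied_object_count(throughput_per_hour, duration_minutes):
    """Objects processed in a run: throughput * duration in hours."""
    return throughput_per_hour * duration_minutes / 60


if __name__ == "__main__":
    # Metascan 8 row: 8.9 minutes, 878,764 objects/hour.
    print(round(avg_processing_time(878764), 4))    # about 0.0041 s/object
    print(round(implied_object_count(878764, 8.9)))  # about 130,350 objects
```

Every row of this table implies roughly 130,350 processed objects, far more than the 5,260 files in the dataset, so "processed objects" is not the same as the number of submitted files. The average processing time therefore appears to be total run time divided by objects across both servers, not the latency of a single file.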

System resource utilization:

| Use case | CPU avg/max, Core #1 (%) | CPU avg/max, Core #2 (%) | RAM avg/max, Core #1 (%) | RAM avg/max, Core #2 (%) | Avg. network speed, Core #1 (KB/s) | Avg. network speed, Core #2 (KB/s) |
|---|---|---|---|---|---|---|
| Metascan 8 | 81.1/99.3 | 82.6/99.2 | 41.3/42.9 | 40.1/47.8 | 3,148 | 3,925 |
| Metascan 12 | 82.9/99.7 | 84.4/99.8 | 44.9/50.4 | 46.0/53.8 | 3,115 | 2,840 |
| Metascan 16 | 88.9/99.4 | 84.9/99.3 | 48.3/53.1 | 50.8/56.0 | 3,485 | 2,812 |
| Deep CDR | 83.5/99.5 | 81.8/99.4 | 42.1/50.8 | 42.9/49.8 | 3,576 | 2,985 |
| Proactive DLP | 56.4/98.8 | 57.9/99.0 | 40.8/45.4 | 39.7/41.4 | 6,440 | 6,321 |
| Vulnerability | 70.1/99.0 | 68.8/99.2 | 38.3/41.7 | 41.8/43.6 | 10,860 | 9,219 |
| Embedded Sandbox | 40.9/98.7 | 41.7/98.4 | 41.1/54.3 | 44.5/52.8 | 1,968 | 1,753 |

MetaDefender Core with common engine packages

Summary metrics:

| Use case | Scan duration (minutes) | Throughput (processed objects/hour) | Avg. processing time (seconds/object) |
|---|---|---|---|
| Metascan 8 + Deep CDR | 14.3 | 544,828 | 0.007 |
| Metascan 8 + Deep CDR + Proactive DLP | 14.5 | 538,636 | 0.007 |
| Metascan 8 + Deep CDR + Proactive DLP + Vulnerability | 16.1 | 486,986 | 0.007 |
| Metascan 8 + Deep CDR + Proactive DLP + Vulnerability + Embedded Sandbox | 178.0 | 43,938 | 0.082 |
| Metascan 12 + Deep CDR | 19.8 | 394,452 | 0.009 |
| Metascan 12 + Deep CDR + Proactive DLP | 20.1 | 389,395 | 0.009 |
| Metascan 12 + Deep CDR + Proactive DLP + Vulnerability | 20.6 | 374,421 | 0.01 |
| Metascan 12 + Deep CDR + Proactive DLP + Vulnerability + Embedded Sandbox | 179.5 | 43,564 | 0.083 |
| Metascan 16 + Deep CDR | 21.9 | 356,391 | 0.01 |
| Metascan 16 + Deep CDR + Proactive DLP | 23.8 | 327,341 | 0.01 |
| Metascan 16 + Deep CDR + Proactive DLP + Vulnerability | 24.4 | 320,960 | 0.01 |
| Metascan 16 + Deep CDR + Proactive DLP + Vulnerability + Embedded Sandbox | 180.9 | 43,236 | 0.083 |

System resource utilization:

| Use case | CPU avg/max, Core #1 (%) | CPU avg/max, Core #2 (%) | RAM avg/max, Core #1 (%) | RAM avg/max, Core #2 (%) | Avg. network speed, Core #1 (KB/s) | Avg. network speed, Core #2 (KB/s) |
|---|---|---|---|---|---|---|
| Metascan 8 + Deep CDR | 89.5/99.8 | 90.2/99.8 | 45.8/51.6 | 45.3/52.6 | 4,835 | 5,040 |
| Metascan 8 + Deep CDR + Proactive DLP | 92.1/99.8 | 91.9/99.9 | 45.3/51.4 | 44.9/52.0 | 5,187 | 5,018 |
| Metascan 8 + Deep CDR + Proactive DLP + Vulnerability | 90.6/99.9 | 90.7/99.8 | 45.4/51.5 | 45.8/51.7 | 3,894 | 3,787 |
| Metascan 8 + Deep CDR + Proactive DLP + Vulnerability + Embedded Sandbox | 50.9/99.3 | 49.8/99.4 | 50.8/61.3 | 50.9/60.8 | 1,996 | 1,844 |
| Metascan 12 + Deep CDR | 88.5/99.8 | 88.9/99.7 | 48.9/53.8 | 48.0/54.1 | 2,946 | 3,045 |
| Metascan 12 + Deep CDR + Proactive DLP | 89.0/99.8 | 87.4/99.8 | 49.8/54.2 | 49.2/53.7 | 2,164 | 2,034 |
| Metascan 12 + Deep CDR + Proactive DLP + Vulnerability | 88.3/99.8 | 88.8/99.7 | 46.1/55.7 | 47.3/56.8 | 2,216 | 2,154 |
| Metascan 12 + Deep CDR + Proactive DLP + Vulnerability + Embedded Sandbox | 52.1/99.2 | 51.5/99.3 | 51.3/63.8 | 52.6/62.1 | 2,008 | 1,954 |
| Metascan 16 + Deep CDR | 87.9/99.7 | 88.6/99.5 | 54.3/65.7 | 54.7/65.0 | 2,079 | 2,132 |
| Metascan 16 + Deep CDR + Proactive DLP | 80.3/99.5 | 79.4/98.7 | 55.0/60.7 | 55.3/60.4 | 2,860 | 2,704 |
| Metascan 16 + Deep CDR + Proactive DLP + Vulnerability | 83.9/99.6 | 81.4/99.6 | 53.2/61.9 | 52.7/62.5 | 2,032 | 2,218 |
| Metascan 16 + Deep CDR + Proactive DLP + Vulnerability + Embedded Sandbox | 53.8/99.4 | 53.6/99.6 | 53.0/63.7 | 54.9/65.0 | 1,843 | 1,566 |

Recommendations

Controlling total processing time of each MD Core server:

In this deployment model, files should be organized and sent so that each MD Core server is used as fully as possible; one Core server sitting idle while the other is busy wastes capacity. Round robin balances the number of requests, not their processing cost, so a run of large files (for example, PPT files, which average 5.5 MB in this dataset) can keep one server busy while the other runs out of work. Distributing files so that every Core server stays busy improves overall system performance and reduces the risk of bottlenecks and overload.
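One possible client-side alternative to plain round robin is to balance by bytes instead of by request count. The sketch below uses a greedy "largest file first, to the least-loaded server" rule; it assumes file size is a usable proxy for processing cost and that the client submits to each Core server's URL directly rather than through the load balancer. Neither assumption is part of the tested setup, and `assign_by_size` is a hypothetical name.

```python
import heapq


def assign_by_size(files, servers):
    """Greedy size balancing. files: list of (name, size) pairs.
    Each file, largest first, goes to the server with the fewest bytes so far."""
    # Heap entries: (bytes assigned, tie-break index, server name).
    heap = [(0, i, server) for i, server in enumerate(servers)]
    heapq.heapify(heap)
    plan = {server: [] for server in servers}
    for name, size in sorted(files, key=lambda f: f[1], reverse=True):
        load, i, server = heapq.heappop(heap)
        plan[server].append(name)
        heapq.heappush(heap, (load + size, i, server))
    return plan


if __name__ == "__main__":
    batch = [("p1.ppt", 50), ("t1.txt", 1), ("p2.ppt", 50), ("t2.txt", 1)]
    print(assign_by_size(batch, ["core-1", "core-2"]))
```

For this batch, a strict rotation in submission order would send both PPT files to the same server, while the greedy rule gives each server one PPT and one TXT file.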

Adding the right number of MD Core servers to the cluster:

Adding more Core servers to this model increases the load on the shared database. When adding a new MD Core server, monitor the database server's performance (memory and CPU consumption, disk usage, network bandwidth, request response time, and so on) to confirm that it can still handle the load. This keeps the database server able to serve the whole cluster efficiently.
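Connection usage is one database metric that grows directly with the number of Core servers. A minimal sketch, assuming PostgreSQL 10 or later (where `pg_stat_activity` has a `backend_type` column): `run_query` is a hypothetical callable that executes one statement and returns the first value, so any driver can be plugged in. CPU, memory, and disk figures come from the operating system or, on Amazon RDS, from Amazon CloudWatch.

```python
def connection_usage(run_query):
    """Return (client_connections, max_connections, usage_ratio).

    run_query(sql) must execute one SQL statement and return the single
    value in its first row (hypothetical adapter, see example below)."""
    # pg_stat_activity has one row per server process; count only client sessions.
    open_connections = int(run_query(
        "SELECT count(*) FROM pg_stat_activity WHERE backend_type = 'client backend'"))
    # SHOW returns the setting as text, so convert it to an integer.
    max_connections = int(run_query("SHOW max_connections"))
    return open_connections, max_connections, open_connections / max_connections


# Example wiring with psycopg2 (not executed here; connection details are placeholders):
#   import psycopg2
#   conn = psycopg2.connect(host="db-host", dbname="db-name", user="user", password="secret")
#   cur = conn.cursor()
#   def run_query(sql):
#       cur.execute(sql)
#       return cur.fetchone()[0]
#   used, limit, ratio = connection_usage(run_query)
```

Sampling this ratio before and after adding a Core server shows how much connection headroom the new server consumed.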

Optimizing the database server for better performance:

Adding still more Core servers increases the strain on the shared database, so the database must be tuned to handle the extra load effectively. If needed, adjust the default PostgreSQL settings for heavier data loads. These settings are in <PostgreSQL install location\version>\data\postgresql.conf.
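As one example of such a setting, the PostgreSQL documentation suggests about 25% of system memory as a reasonable starting value for `shared_buffers` on a dedicated database server with 1 GB or more of RAM. The sketch below only turns that rule of thumb into a `postgresql.conf` line; the function names are hypothetical, and the right value for a given workload still needs benchmarking. On Amazon RDS, as used in this test, `postgresql.conf` is not edited directly; the same parameters are set through a DB parameter group.

```python
def shared_buffers_mb(ram_gb, fraction=0.25):
    """Starting value for shared_buffers in MB: about 25% of RAM on a
    dedicated database server (rule of thumb from the PostgreSQL docs)."""
    return int(ram_gb * 1024 * fraction)


def conf_line(ram_gb):
    """Render the postgresql.conf line for that value."""
    return f"shared_buffers = {shared_buffers_mb(ram_gb)}MB  # change requires a server restart"


if __name__ == "__main__":
    # 64 GB matches the db.m7i.4xlarge instance used in this test.
    print(conf_line(64))
```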

In addition, MD Core supports a parameter (db_connection) that specifies the maximum number of connections MD Core can handle. For details, see the MetaDefender Configuration guide.
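When several Core servers share one database, their combined connections must fit under PostgreSQL's `max_connections`. A minimal budgeting sketch, assuming each MD Core server may open up to `db_connection` connections (an assumption, not a documented guarantee) and that PostgreSQL keeps its default `superuser_reserved_connections` of 3; `max_db_connection_per_server` and `other_clients` are hypothetical names.

```python
def max_db_connection_per_server(max_connections, core_servers,
                                 reserved=3, other_clients=0):
    """Largest per-server db_connection value that keeps all Core servers
    together within PostgreSQL's max_connections.

    reserved: superuser_reserved_connections (PostgreSQL default is 3).
    other_clients: connections needed by anything else (monitoring, admin tools)."""
    available = max_connections - reserved - other_clients
    if core_servers < 1 or available < core_servers:
        raise ValueError("max_connections is too low for this many Core servers")
    # Split the remaining connections evenly, rounding down.
    return available // core_servers


if __name__ == "__main__":
    for servers in (2, 3, 4):
        print(servers, "servers ->", max_db_connection_per_server(100, servers))
```

Adding a Core server lowers the safe per-server value, which is one concrete reason to revisit both settings whenever the cluster grows.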